com.io7m.r2 0.3.0-SNAPSHOT Documentation

- 1. Package Information

- 2. Design And Implementation

- 2.1. Conventions

- 2.2. Concepts

- 2.3. Coordinate Systems

- 2.4. Meshes

- 2.5. Transforms

- 2.6. Instances

- 2.7. Render Targets

- 2.8. Shaders

- 2.9. Shaders: Instance

- 2.10. Shaders: Light

- 2.11. Stencils

- 2.12. Lighting

- 2.13. Lighting: Directional

- 2.14. Lighting: Spherical

- 2.15. Lighting: Projective

- 2.16. Shadows

- 2.17. Shadows: Variance Mapping

- 2.18. Deferred Rendering

- 2.19. Deferred Rendering: Geometry

- 2.20. Deferred Rendering: Lighting

- 2.21. Deferred Rendering: Position Reconstruction

- 2.22. Forward rendering (Translucency)

- 2.23. Normal Mapping

- 2.24. Logarithmic Depth

- 2.25. Environment Mapping

- 2.26. Stippling

- 2.27. Generic Refraction

- 2.28. Filter: Fog

- 2.29. Filter: Screen Space Ambient Occlusion

- 2.30. Filter: Emission

- 2.31. Filter: FXAA

- 3. API Documentation

Package Information

Orientation

Features

- A deferred rendering core for opaque objects.

- A forward renderer, supporting a subset of the features of the deferred renderer, for rendering translucent objects.

- A full dynamic lighting system, including variance shadow mapping. The use of deferred rendering allows for potentially hundreds of dynamic lights per scene.

- Ready-to-use shaders providing surfaces with a wide variety of effects such as normal mapping, environment-mapped reflections, generic refraction, surface emission, mapped specular highlights, etc.

- A variety of postprocessing effects such as box blurring, screen-space ambient occlusion (SSAO), fast approximate antialiasing (FXAA), color correction, bloom, etc. Effects can be applied in any order.

- Explicit control over all resource loading and caching. For all transient resources, the programmer is required to provide the renderer with explicit pools, and the pools themselves are responsible for allocating and loading resources.

- Extensive use of static types. As with all io7m packages, there is extreme emphasis on using the type system to make it difficult to use the APIs incorrectly.

- Portability. The renderer will run on any system supporting OpenGL 3.3 and Java 8.

- A scene graph. The renderer expects the programmer to provide a set of instances (with associated shaders) and lights once per frame, and the renderer will obediently draw exactly those instances. This frees the programmer from having to interact with a clumsy and type-unsafe object-oriented scene graph as with other 3D engines, and from having to try to crowbar their own program's data structures into an existing graph system.

- Spatial partitioning. The renderer knows nothing of the world the programmer is trying to render. The programmer is expected to have done the work of deciding which instances and lights contribute to the current image, and to provide only those lights and instances for the current frame. This means that the programmer is free to use any spatial partitioning system desired.

- Input handling. The renderer knows nothing about keyboards, mice, joysticks. The programmer passes an immutable snapshot of a scene to the renderer, and the renderer returns an image. This means that the programmer is free to use any input system desired without having to painfully integrate their own code with an existing input system as with other 3D engines.

- Audio. The renderer makes images, not sounds. This allows programmers to use any audio system they want in their programs.

- Skeletal animation. The input to the renderer is a set of triangle meshes in the form of vertex buffer objects. This means that the programmer is free to use any skeletal animation system desired, providing that the system is capable of producing vertex buffer objects of the correct type as a result.

- Model loading. The input to the renderer is a set of triangle meshes in the form of vertex buffer objects. This means that the programmer is free to use any model loading system desired, providing that the system is capable of producing vertex buffer objects of the correct type as a result.

- Future proofing. The average lifetime of a rendering system is about five years. Due to the extremely rapid pace of advancement in graphics hardware, the methods use to render graphics today will bear almost no relation to those used five years into the future. The r2 package is under no illusion that it will still be relevant in a decade's time. It is designed to get work done today, using exactly those techniques that are relevant today. It will not be indefinitely expanded and grown organically, as this would directly contradict the goal of having a minimalist and correct rendering system.

- OpenGL ES 2 support. The ES 2 standard was written as a reaction to the insane committee politics that plagued the OpenGL 2.* standards. It is crippled to the point that it essentially cannot support almost any of the rendering techniques present in the r2 package, and is becoming increasingly irrelevant as the much saner ES 3 is adopted by hardware vendors.

Installation

Source compilation

$ mvn -C clean install

Maven

Regular releases are made to the Central Repository, so it's possible to use the com.io7m.r2 package in your projects with the following Maven dependencies:

<dependency> <groupId>com.io7m.r2</groupId> <artifactId>io7m-r2-main</artifactId> <version>0.3.0-SNAPSHOT</version> </dependency>

License

All files distributed with the com.io7m.r2 package are placed under the following license:

Copyright © 2016 <code@io7m.com> http://io7m.com Permission to use, copy, modify, and/or distribute this software for any purpose with or without fee is hereby granted, provided that the above copyright notice and this permission notice appear in all copies. THE SOFTWARE IS PROVIDED "AS IS" AND THE AUTHOR DISCLAIMS ALL WARRANTIES WITH REGARD TO THIS SOFTWARE INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

Design And Implementation

- 2.1. Conventions

- 2.2. Concepts

- 2.3. Coordinate Systems

- 2.4. Meshes

- 2.5. Transforms

- 2.6. Instances

- 2.7. Render Targets

- 2.8. Shaders

- 2.9. Shaders: Instance

- 2.10. Shaders: Light

- 2.11. Stencils

- 2.12. Lighting

- 2.13. Lighting: Directional

- 2.14. Lighting: Spherical

- 2.15. Lighting: Projective

- 2.16. Shadows

- 2.17. Shadows: Variance Mapping

- 2.18. Deferred Rendering

- 2.19. Deferred Rendering: Geometry

- 2.20. Deferred Rendering: Lighting

- 2.21. Deferred Rendering: Position Reconstruction

- 2.22. Forward rendering (Translucency)

- 2.23. Normal Mapping

- 2.24. Logarithmic Depth

- 2.25. Environment Mapping

- 2.26. Stippling

- 2.27. Generic Refraction

- 2.28. Filter: Fog

- 2.29. Filter: Screen Space Ambient Occlusion

- 2.30. Filter: Emission

- 2.31. Filter: FXAA

Conventions

Overview

This section attempts to document the mathematical and typographical conventions used in the rest of the documentation.

Mathematics

Rather than rely on untyped and ambiguous mathematical notation, this documentation expresses all mathematics and type definitions in strict Haskell 2010 with no extensions. All Haskell sources are included along with the documentation and can therefore be executed from the command line GHCi tool in order to interactively check results and experiment with functions.

When used within prose, functions are usually referred to using fully qualified notation, such as (Vector3f.cross n t). This is the application of the cross function defined in the Vector3f module, to the arguments n and t.

Concepts

- 2.2.1. Overview

- 2.2.2. Renderer

- 2.2.3. Render Target

- 2.2.4. Geometry Buffer

- 2.2.5. Light Buffer

- 2.2.6. Mesh

- 2.2.7. Transform

- 2.2.8. Instance

- 2.2.9. Light

- 2.2.10. Light Clip Group

- 2.2.11. Light Group

- 2.2.12. Shader

- 2.2.13. Material

Overview

This section attempts to provide a rough overview of the concepts present in the r2 package. Specific implementation details, mathematics, and other technical information is given in later sections that focus on each concept in detail.

Renderer

A renderer is a function that takes an input of some type and produces an output to a render target.

The renderers expose an interface of stateless functions from inputs to outputs. That is, the renderers should be considered to simply take input and produce return images as output. In reality, because the Java language is not pure and because the code is required to perform I/O in order to speak to the GPU, the renderer functions are not really pure. Nevertheless, for the sake of ease of use, lack of surprising results, and correctness, the renderers at least attempt to adhere to the idea of pure functional rendering! This means that the renderers are very easy to integrate into any existing system: They are simply functions that are evaluated whenever the programmer wants an image. The renderers do not have their own main loop, they do not have any concept of time, do not remember any images that they have produced previously, do not maintain any state of their own, and simply write their results to a programmer-provided render target. Passing the same input to a renderer multiple times should result in the same image each time.

Render Target

A render target is a rectangular region of memory allocated on the GPU that can accept the results of a rendering operation. The programmer typically allocates one render target, passes it to a renderer along with a renderer-specific input value, and the renderer populates the given render target with the results. The programmer can then copy the contents of this render target to the screen for viewing, pass it on to a separate filter for extra visual effects, use it as a texture to be applied to objects in further rendered images, etc.

Geometry Buffer

A geometry buffer is a specific type of render target that contains the surface attributes of a set of rendered instances. It is a fundamental part of deferred rendering that allows lighting to be efficiently calculated in screen space, touching only those pixels that will actually contribute to the final rendered image.

Light Buffer

A light buffer is a specific type of render target that contains the summed light contributions for each pixel in the currently rendered scene.

Mesh

A mesh is a collection of vertices that define a polyhedral object, along with a list of indices that describe how to make triangles out of the given vertices.

Transform

A transform moves coordinates in one coordinate space to another. Typically, a transform is used to position and orient a mesh inside a visible set.

Instance

An instance is essentially an object or group of objects that can be rendered. Instances come in several forms: single, batched, and billboarded.

A batched instance consists of a reference to a mesh and an array of transforms. The results of rendering a batched instance are the same as if a single instance had been created and rendered for each transform in the array. The advantage of batched instances is efficiency: Batched instances are submitted to the GPU for rendering in a single draw call. Reducing the total number of draw calls per scene is an important optimization on modern graphics hardware, and batched instances provide a means to achieve this.

A billboarded instance is a further specialization of a batched instance intended for rendering large numbers of objects that always face towards the observer. Billboarding is a technique that is often used to render large numbers of distant objects in a scene: Rather than incur the overhead of rendering lots of barely-visible objects at full detail, the objects are replaced with billboarded sprites at a fraction of the cost. There is also a significant saving in the memory used to store transforms, because a billboarded sprite need only store a position and scale as opposed to a full transform matrix per rendered object.

Light

A light describes a light source within a scene. There are many different types of lights, each with different behaviours. Lights may or may not cast shadows, depending on their type. All lighting in the r2 package is completely dynamic; there is no support for static lighting in any form. Shadows are exclusively provided via shadow mapping, resulting in efficient per-pixel shadows.

Light Clip Group

A light clip group is a means of constraining the contributions of groups of lights to a provided volume.

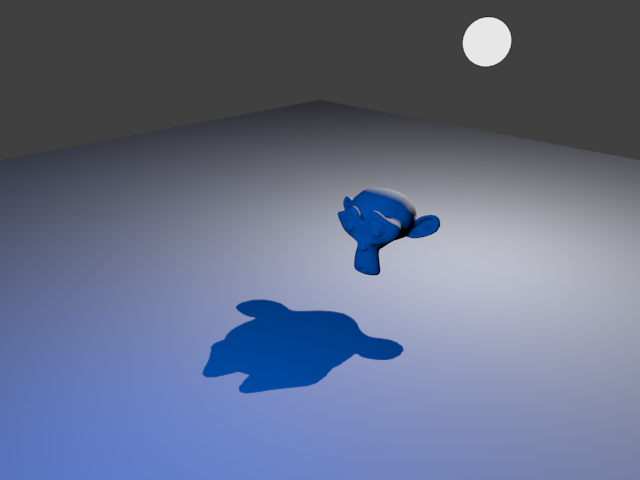

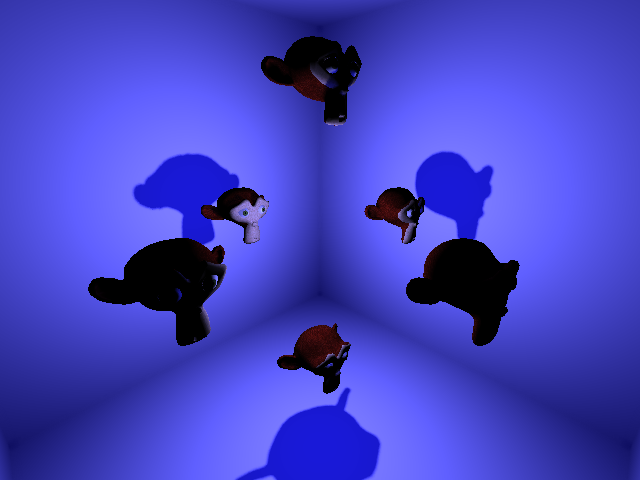

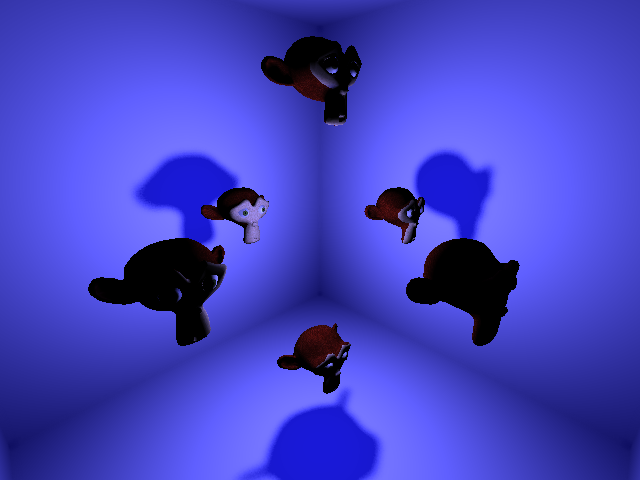

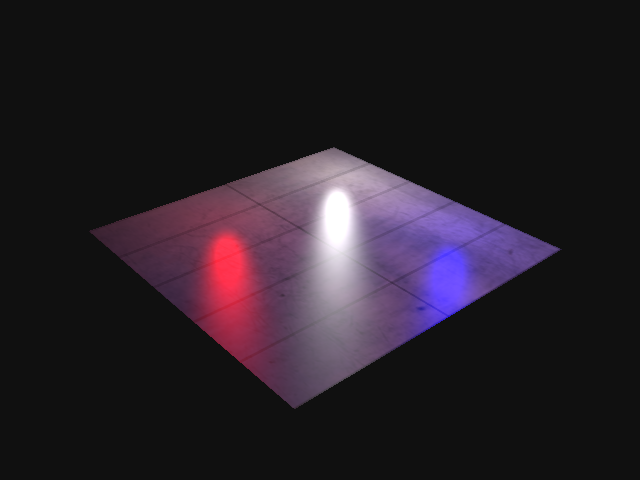

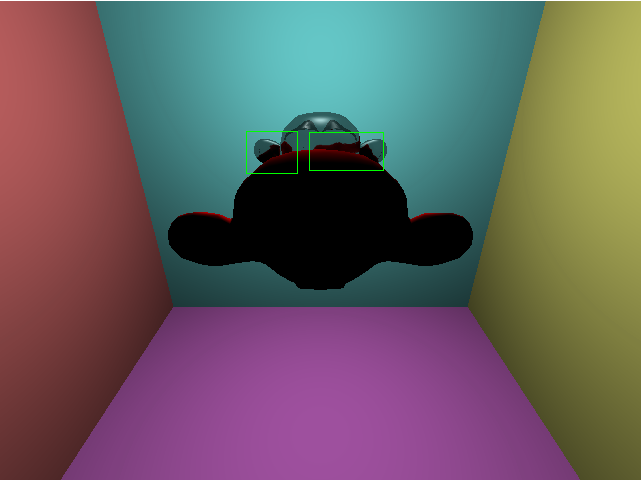

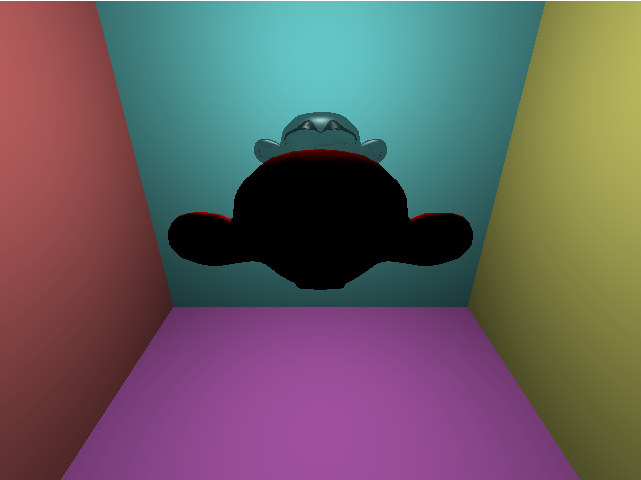

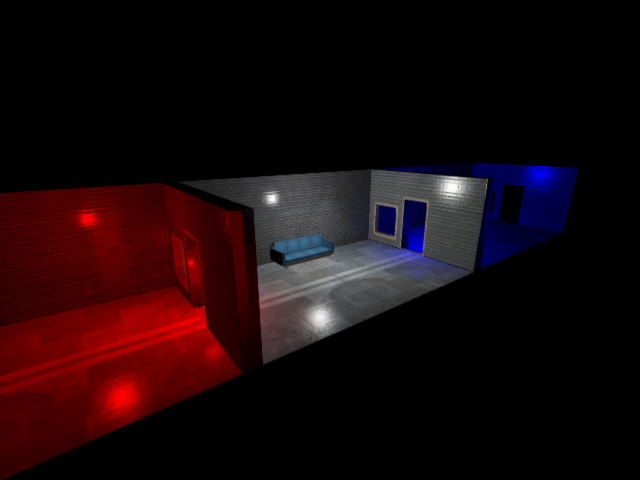

Because, like most renderers, the r2 package implements so-called local illumination, lights that do not have explicit shadow mapping enabled are able to bleed through solid objects:

Enabling shadow mapping for every single light source would be prohibitively expensive [3], but for some scenes, acceptable results can be achieved by simply preventing the light source from affecting pixels outside of a given clip volume.

Light Group

A light group is similar to a light clip group in that is intended to constrain the contributions of a set of lights. A light group instead requires the cooperation of a renderer that can mark groups of instances using the stencil component of the current geometry buffer. At most 15 light groups can be present in a given scene, and for a given light group n, only instances in group n will be affected by lights in group n. By default, if a group is not otherwise specified, all lights and instances are rendered in group 1.

Shader

A shader is a small program that executes on the GPU and is used to produce images. The r2 package provides a wide array of general-purpose shaders, and the intention is that users of the package will not typically have to write their own [2].

The package roughly divides shaders into categories. Single instance shaders are typically used to calculate and render the surface attributes of single instances into a geometry buffer. Batched instance shaders do the same for batched instances. Light shaders render the contributions of light sources into a light buffer. There are many other types of shader in the r2 package but users are generally not exposed to them directly.

Coordinate Systems

- 2.3.1. Conventions

- 2.3.2. Object Space

- 2.3.3. World Space

- 2.3.4. Eye Space

- 2.3.5. Clip Space

- 2.3.6. Normalized-Device Space

- 2.3.7. Screen Space

Conventions

This section attempts to describe the mathematical conventions that the r2 package uses with respect to coordinate systems. The r2 package generally does not deviate from standard OpenGL conventions, and this section does not attempt to give a rigorous formal definition of these existing conventions. It does however attempt to establish the naming conventions that the package uses to refer to the standard coordinate spaces [10].

The r2 package uses the jtensors package for all mathematical operations on the CPU, and therefore shares its conventions with regards to coordinate system handedness. Important parts are repeated here, but the documentation for the jtensors package should be inspected for details.

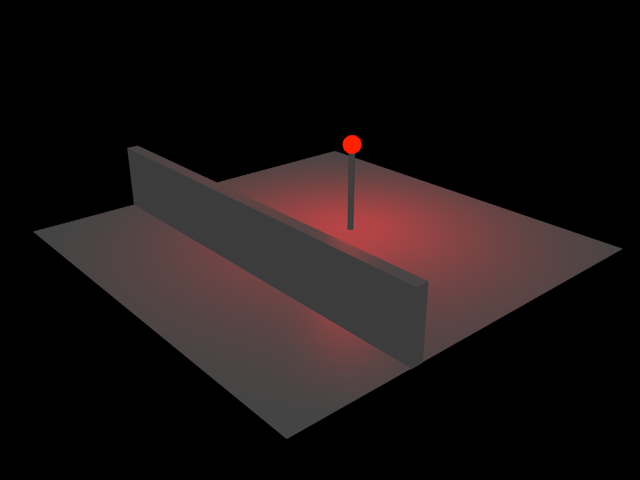

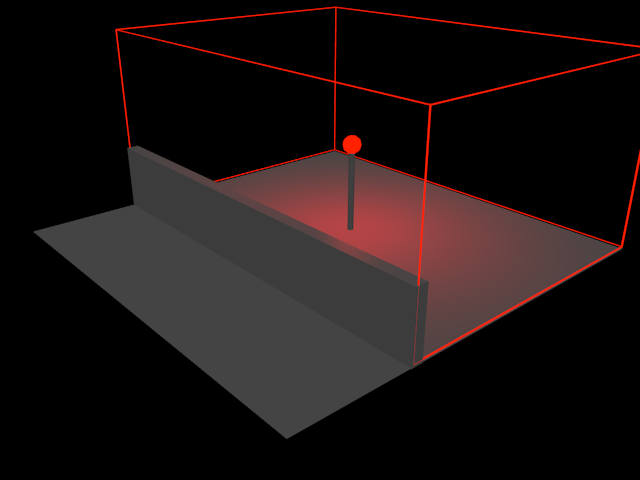

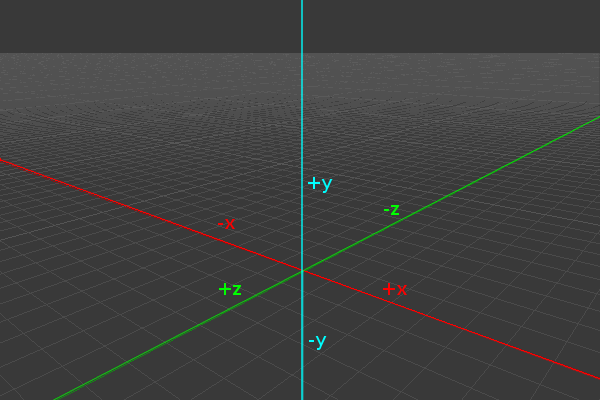

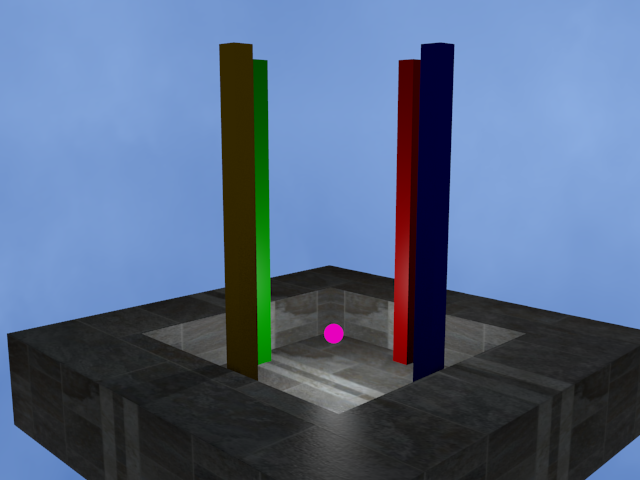

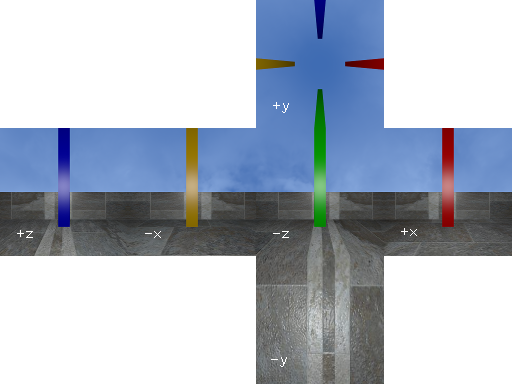

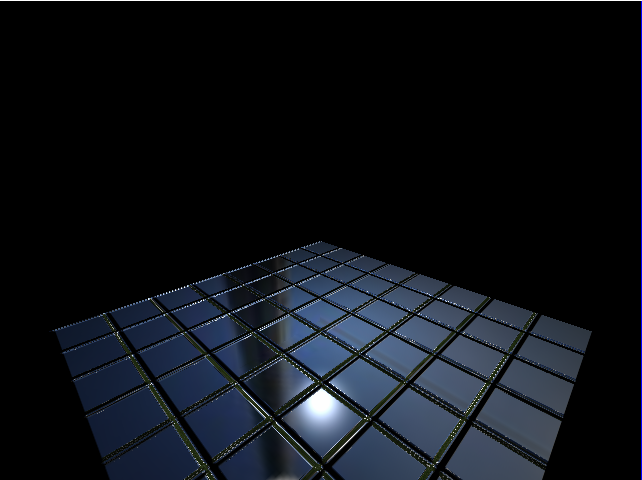

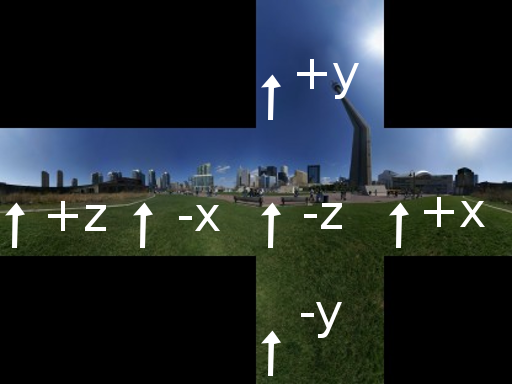

Any of the matrix functions that deal with rotations assume a right-handed coordinate system. This matches the system conventionally used by OpenGL (and most mathematics literature) . A right-handed coordinate system assumes that if the viewer is standing at the origin and looking towards negative infinity on the Z axis, then the X axis runs horizontally (left towards negative infinity and right towards positive infinity) , and the Y axis runs vertically (down towards negative infinity and up towards positive infinity). The following image demonstrates this axis configuration:

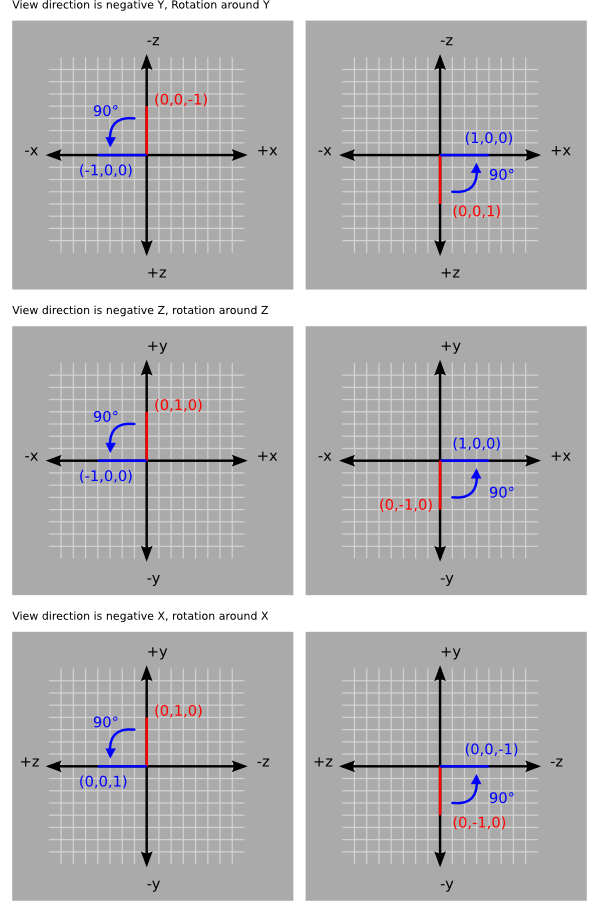

The jtensors package adheres to the convention that a positive rotation around an axis represents a counter-clockwise rotation when viewing the system along the negative direction of the axis in question.

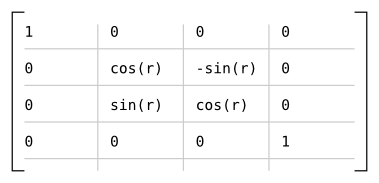

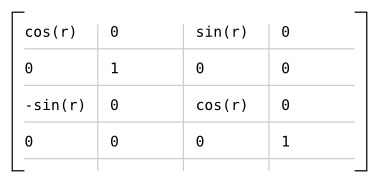

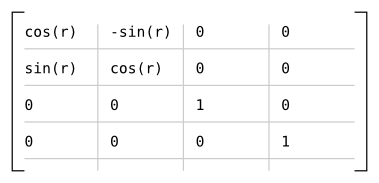

The package uses the following matrices to define rotations around each axis:

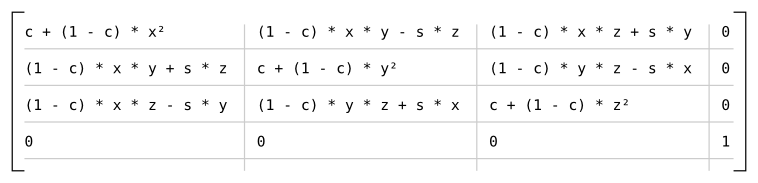

Which results in the following matrix for rotating r radians around the axis given by (x, y, z), assuming s = sin(r) and c = cos(r):

Object Space

Object space is the local coordinate system used to describe the positions of vertices in meshes. For example, a unit cube with the origin placed at the center of the cube would have eight vertices with positions expressed as object-space coordinates:

cube = {

(-0.5, -0.5, -0.5),

( 0.5, -0.5, -0.5),

( 0.5, -0.5, 0.5),

(-0.5, -0.5, 0.5),

(-0.5, 0.5, -0.5),

( 0.5, 0.5, -0.5),

( 0.5, 0.5, 0.5),

(-0.5, 0.5, 0.5)

}In other rendering systems, object space is sometimes referred to as local space, or model space.

In the r2 package, object space is represented by the R2SpaceObjectType.

World Space

In order to position objects in a scene, they must be assigned a transform that can be applied to each of their object space vertices to yield absolute positions in so-called world space.

As an example, if the unit cube described above was assigned a transform that moved its origin to (3, 5, 1), then its object space vertex (-0.5, 0.5, 0.5) would end up at (3 + -0.5, 5 + 0.5, 1 + 0.5) = (2.5, 5.5, 1.5) in world space.

In the r2 package, a transform applied to an object produces a 4x4 model matrix. Multiplying the model matrix with the positions of the object space vertices yields vertices in world space.

Note that, despite the name, world space does not imply that users have to store their actual world representation in this coordinate space. For example, flight simulators often have to transform their planet-scale world representation to an aircraft relative representation for rendering to work around the issues inherent in rendering extremely large scenes. The basic issue is that the relatively low level of floating point precision available on current graphics hardware means that if the coordinates of objects within the flight simulator's world were to be used directly, the values would tend to be drastically larger than those that could be expressed by the available limited-precision floating point types on the GPU. Instead, simulators often transform the locations of objects in their worlds such that the aircraft is placed at the origin (0, 0, 0) and the objects are positioned relative to the aircraft before being passed to the GPU for rendering. As a concrete example, within the simulator's world, the aircraft may be at (1882838.3, 450.0, 5892309.0), and a control tower nearby may be at (1883838.5, 0.0, 5892809.0). These coordinate values would be far too large to pass to the GPU if a reasonable level of precision is required, but if the current aircraft location is subtracted from all positions, the coordinates in aircraft relative space of the aircraft become (0, 0, 0) and the coordinates of the tower become (1883838.5 - 1882838.3, 0.0 - 450.0, 5892809.0 - 5892309.0) = (1000.19, -450.0, 500.0). The aircraft relative space coordinates are certainly small enough to be given to the GPU directly without risking imprecision issues, and therefore the simulator would essentially treat aircraft relative space and r2 world space as equivalent [11].

In the r2 package, world space is represented by the R2SpaceWorldType.

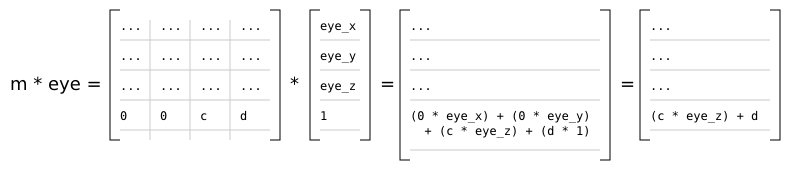

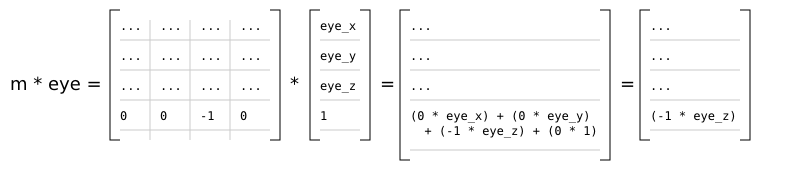

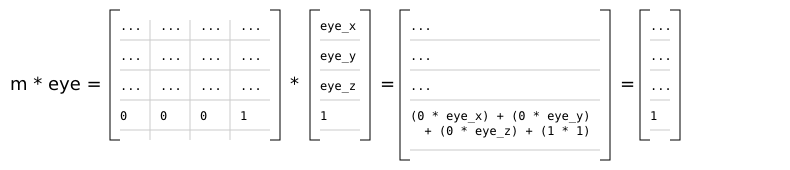

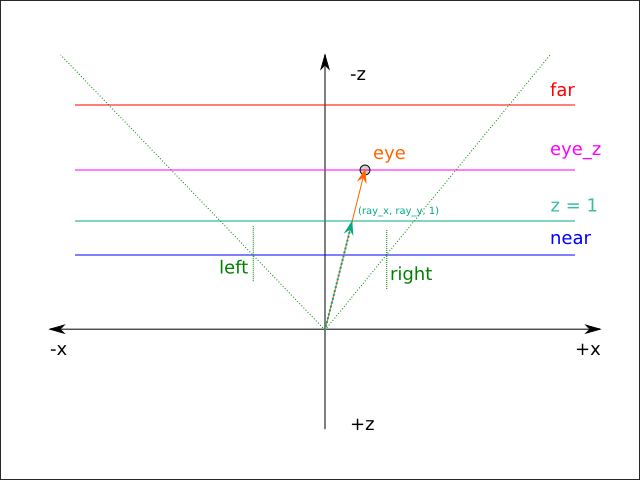

Eye Space

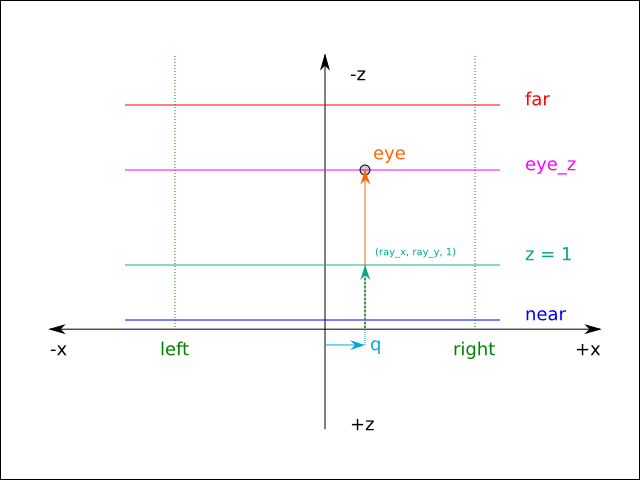

Eye space represents a coordinate system with the observer implicitly fixed at the origin (0.0, 0.0, 0.0) and looking towards infinity in the negative Z direction.

The main purpose of eye space is to simplify the mathematics required to implement various algorithms such as lighting. The problem with implementing these sorts of algorithms in world space is that one must constantly take into account the position of the observer (typically by subtracting the location of the observer from each set of world space coordinates and accounting for any change in orientation of the observer). By fixing the orientation of the observer towards negative Z, and the position of the observer at (0.0, 0.0, 0.0), and by transforming all vertices of all objects into the same system, the mathematics of lighting are greatly simplified. The majority of the rendering algorithms used in the r2 package are implemented in eye space.

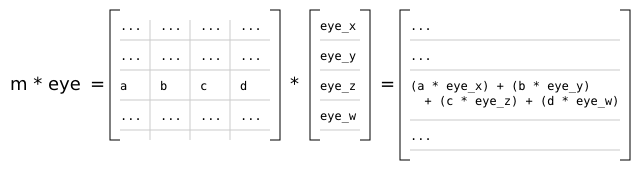

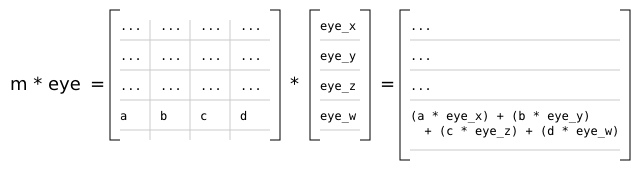

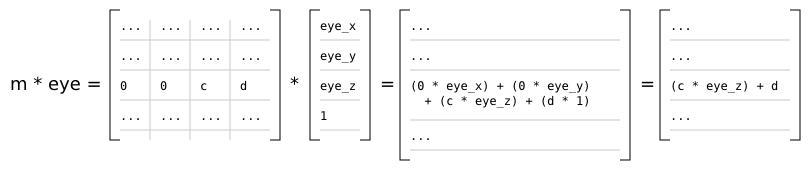

In the r2 package, the observer produces a 4x4 view matrix. Multiplying the view matrix with any given world space position yields a position in eye space. In practice, the view matrix v and the current object's model matrix m are concatenated (multiplied) to produce a model-view matrix mv = v * m [5], and mv is then passed directly to the renderer's vertex shaders to transform the current object's vertices [6].

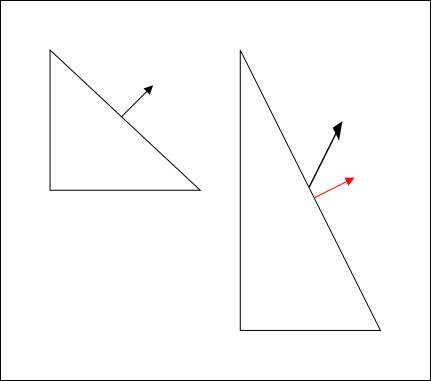

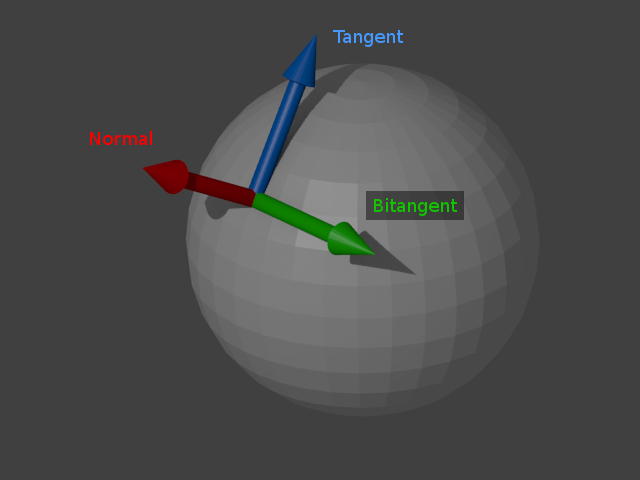

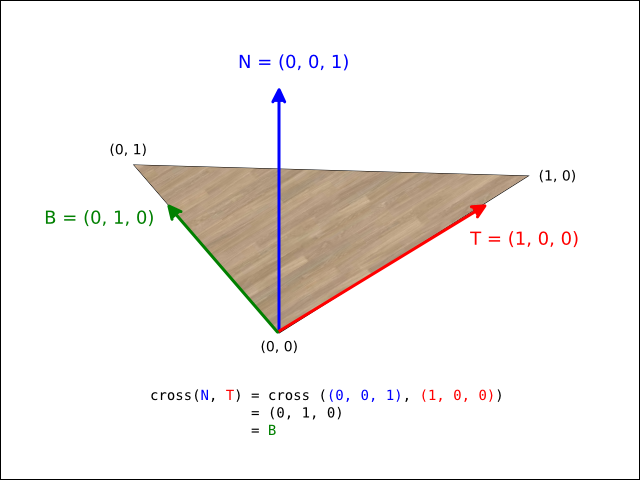

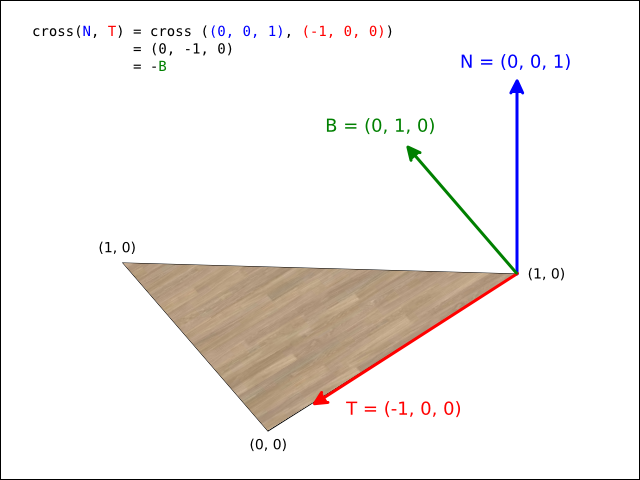

Additionally, as the r2 package does all lighting in eye space, it's necessary to transform the object space normal vectors given in mesh data to eye space. However, the usual model-view matrix will almost certainly contain some sort of translational component and possibly a scaling component. Normal vectors are not supposed to be translated; they represent directions! A non-uniform scale applied to an object will also deform the normal vectors, making them non-perpendicular to the surface they're associated with:

With the scaled triangle on the right, the normal vector is now not perpendicular to the surface (in addition to no longer being of unit length). The red vector indicates what the surface normal should be.

Therefore it's necessary to derive another 3x3 matrix known as the normal matrix from the model-view matrix that contains just the rotational component of the original matrix. The full derivation of this matrix is given in Mathematics for 3D Game Programming and Computer Graphics, Third Edition [4]. Briefly, the normal matrix is equal to the inverse transpose of the top left 3x3 elements of an arbitrary 4x4 model-view matrix.

In other rendering systems, eye space is sometimes referred to as camera space, or view space.

In the r2 package, eye space is represented by the R2SpaceEyeType.

Clip Space

Clip space is a homogeneous coordinate system in which OpenGL performs clipping of primitives (such as triangles). In OpenGL, clip space is effectively a left-handed coordinate system by default [7]. Intuitively, coordinates in eye space are transformed with a projection (normally either an orthographic or perspective projection) such that all vertices are projected into a homogeneous unit cube placed at the origin - clip space - resulting in four-dimensional (x, y, z, w) positions. Positions that end up outside of the cube are clipped (discarded) by dedicated clipping hardware, usually producing more triangles as a result.

A projection effectively determines how objects in the three-dimensional scene are projected onto the two-dimensional viewing plane (a computer screen, in most cases) . A perspective projection transforms vertices such that objects that are further away from the viewing plane appear to be smaller than objects that are close to it, while an orthographic projection preserves the perceived sizes of objects regardless of their distance from the viewing plane.

Because eye space is a right-handed coordinate system by convention, but by default clip space is left-handed, the projection matrix used will invert the sign of the z component of any given point.

In the r2 package, the observer produces a 4x4 projection matrix. The projection matrix is passed, along with the model-view matrix, to the renderer's vertex shaders. As is normal in OpenGL, the vertex shader produces clip space coordinates which are then used by the hardware rasterizer to produce color fragments onscreen.

In the r2 package, clip space is represented by the R2SpaceClipType.

Normalized-Device Space

Normalized-device space is, by default, a left-handed [8] coordinate space in which clip space coordinates have been divided by their own w component (discarding the resulting w = 1 component in the process), yielding three dimensional coordinates. The range of values in the resulting coordinates are effectively normalized by the division to fall within the ranges [(-1, -1, -1), (1, 1, 1)] [9]. The coordinate space represents a simplifying intermediate step between having clip space coordinates and getting something projected into a two-dimensional image (screen space) for viewing.

The r2 package does not directly use or manipulate values in normalized-device space; it is mentioned here for completeness.

Screen Space

Screen space is, by default, a left-handed coordinate system representing the screen (or window) that is displaying the actual results of rendering. If the screen is of width w and height h, and the current depth range of the window is [n, f], then the range of values in screen space coordinates runs from [(0, 0, n), (w, h, f)]. The origin (0, 0, 0) is assumed to be at the bottom-left corner.

The depth range is actually a configurable value, but the r2 package keeps the OpenGL default. From the glDepthRange function manual page:

After clipping and division by w, depth coordinates range from -1 to 1, corresponding to the near and far clipping planes. glDepthRange specifies a linear mapping of the normalized depth coordinates in this range to window depth coordinates. Regardless of the actual depth buffer implementation, window coordinate depth values are treated as though they range from 0 through 1 (like color components). Thus, the values accepted by glDepthRange are both clamped to this range before they are accepted. The setting of (0,1) maps the near plane to 0 and the far plane to 1. With this mapping, the depth buffer range is fully utilized.

As OpenGL, by default, specifies a depth range of [0, 1], the positive Z axis points away from the observer and so the coordinate system is left handed.

Meshes

Overview

A mesh is a collection of vertices that make up the triangles that define a polyhedral object, allocated on the GPU upon which the renderer is executing. In practical terms, a mesh is a pair (a, i), where a is an OpenGL vertex buffer object consisting of vertices, an i is an OpenGL element buffer object consisting of indices that describe how to draw the mesh as a series of triangles.

The contents of a are mutable, but mesh references are considered to be immutable.

Attributes

A mesh consists of vertices. A vertex can be considered to be a value of a record type, with the fields of the record referred to as the attributes of the vertex. In the r2 package, an array buffer containing vertex data is specified using the array buffer types from jcanephora. The jcanephora package allows programmers to specify the exact types of array buffers, allows for the full inspection of type information at runtime, including the ability to reference attributes by name, and allows for type-safe modification of the contents of array buffers using an efficient cursor interface.

Each attribute within an array buffer is assigned a numeric attribute index. A numeric index is an arbitrary number between (including) 0 and some OpenGL implementation-defined upper limit. On modern graphics hardware, OpenGL allows for at least 16 numeric attributes. The indices are used to create an association between fields in the array buffer and shader inputs. For the sake of sanity and consistency, it is the responsibility of rendering systems using OpenGL to establish conventions for the assignment of numeric attribute indices in shaders and array buffers [12]. For example, many systems state that attribute 0 should be of type vec4 and should represent vertex positions. Shaders simply assume that data arriving on attribute input 0 represents position data, and programmers are expected to create meshes where attribute 0 points to the field within the array that contains position data.

The r2 package uses the following conventions everywhere:

| Index | Type | Description |

|---|---|---|

| 0 | vec3 | The object-space position of the vertex |

| 1 | vec2 | The UV coordinates of the vertex |

| 2 | vec3 | The object-space normal vector of the vertex |

| 3 | vec4 | The tangent vector of the vertex |

Batched instances are expected to use the following additional conventions:

Types

In the r2 package, the given attribute conventions are specified by the R2AttributeConventions type.

Transforms

Overview

The ultimate purpose of a transform is to produce one or more matrices that can be combined with other matrices and then finally passed to a shader. The shader uses these matrices to transform vertices and normal vectors during the rendering of objects.

A transform is effectively responsible for producing a model matrix that transforms positions in object space to world space.

Instances

Overview

An instance is a renderable object. There are several types of instances available in the r2 package: single, batched, and billboarded.

Single

A single instance is the simplest type of instance available in the r2 package. A single instance is simply a pair (m, t), where m is a mesh, and t is a transform capable of transforming the object space coordinates of the vertices contained within m to world space.

Batched

A batched instance represents a group of (identical) renderable objects. The reason for the existence of batched instances is simple efficiency: On modern rendering hardware, rendering n single instances means submitting n draw calls to the GPU. As n becomes increasingly large, the overhead of the large number of draw calls becomes a bottleneck for rendering performance. A batched instance of size m allows for rendering a given mesh m times in a single draw call.

A batched instance of size n is a 3-tuple (m, b, t), where m is a mesh, b is a buffer of n 4x4 matrices allocated on the GPU, and t is an array of n transforms allocated on the CPU. For each i where 0 <= i < n, b[i] is the 4x4 model matrix produced from t[i]. The contents of b are typically recalculated and uploaded to the GPU once per rendering frame.

Billboarded

A billboarded instance is a further specialization of batched instances. Billboarding is the name given to a rendering technique where instead of rendering full 3D objects, simple 2D images of those objects are rendered instead using flat rectangles that are arranged such that they are always facing directly towards the observer.

A billboarded instance of size n is a pair (m, p), where m is a mesh [13], and p is a buffer of n world space positions allocated on the GPU.

Render Targets

Shaders

Interface And Calling Protocol

Every shader in the r2 package has an associated Java class. Each class may implement one of the interfaces that are themselves subtypes of the R2ShaderType interface. Each class is responsible for uploading parameters to the actual compiled GLSL shader on the GPU. Certain parameters, such as view matrices, the current size of the screen, etc, are only calculated during each rendering pass and therefore will be supplied to the shader classes at more or less the last possible moment. The calculated parameters are supplied via methods defined on the R2ShaderType subinterfaces, and implementations of the subinterfaces can rely on the methods being called in a very strict predefined order. For example, instances of type R2ShaderInstanceSingleUsableType will receive calls in exactly this order:

- First, onActivate will be called. It is the class's responsibility to activate the GLSL shader at this point.

- Then onReceiveViewValues will be called when the current view-specific values have been calculated.

- Now, for each material m that uses the current shader:

- onReceiveMaterialValues will be called once.

- For each instance i using that uses a material that uses the current shader, onReceiveInstanceTransformValues will be called, followed by onValidate.

The final onValidate call allows the shader to check that all of the required method calls have actually been made by the caller, and the method is permitted to throw R2ExceptionShaderValidationFailed if the caller makes a mistake at any point. The implicit promise is that callers will call all of the methods in the correct order and the correct number of times, and shaders are allowed to loudly complain if and when this does not happen.

Of course, actually requiring the programmer to manually implement all of the above for each new shader would be unreasonable and would just become a new source of bugs. The r2 provides abstract shader implementations to perform the run-time checks listed above without forcing the programmer to implement them all manually. The R2AbstractInstanceShaderSingle type, for example, implements the R2ShaderInstanceSingleUsableType interface and provides a few abstract methods that the programmer implements in order to upload parameters to the GPU. The abstract implementation enforces the calling protocol.

The calling protocol described both ensures that all shader parameters will be set and that the renderers themselves are insulated from the interfaces of actual GLSL shaders. Failing to set parameters, attempting to set parameters that no longer exist, or passing values of the wrong types to GLSL shaders is a common source of bugs in OpenGL programs and almost always results in either silent failure or corrupted visuals. The r2 package takes care to ensure that mistakes of that type are difficult to make.

Shader Modules

Although the GLSL shading language is anti-modular in the sense that it has one large namespace, the r2 package attempts to relieve some of the pain of shader management by delegating to the sombrero package. The sombrero package provides a preprocessor for shader code, allowing shader code to make use of #include directives. It also provides a system for publishing and importing modules full of shaders based internally on the standard Java ServiceLoader API. This allows users that want to write their own shaders to import much of the re-usable shader code from the r2 package into their own shaders without needing to do anything more than have the correct shader jar on the Java classpath [14].

As a simple example, if the user writing custom shaders wants to take advantage of the bilinear interpolation functions used in many r2 shaders, the following #include is sufficient:

#include <com.io7m.r2.shaders.core/R2Bilinear.h> vec3 x = R2_bilinearInterpolate3(...);

The text com.io7m.r2.shaders.core is considered to be the module name, and the R2Bilinear.h name refers to that file within the module. The sombrero resolver maps the request to a concrete resource on the filesystem or in a jar file and returns the content for inclusion.

The r2 package also provides an interface, the R2ShaderPreprocessingEnvironmentType type, that allows constants to be set that will be exposed to shaders upon being preprocessed. Each shader stores an immutable snapshot of the environment used to preprocess it after successful compilation.

Shaders: Instance

Overview

An instance shader is a shader used to render the surfaces of instances. Depending on the context, this may mean rendering the surface attributes of the instances into a geometry buffer, forward rendering the instance directly to the screen (or

other image) , rendering only the depth of the surface, or perhaps not producing any output at all as shaders used simply for stencilling are permitted to do. Instance shaders are most often exposed to the programmer via materials.

Materials

A material is a pair (s, i, p) where p is a value of type m that represents a set of shader parameters, s is a shader that takes parameters of type m, and i is a unique identifier for the material. Materials primarily exist to facilitate batching: By assigning each material a unique identifier, the system can assume that two materials are the same if they have the same identifier, without needing to perform a relatively expensive structural equality comparison between the shaders and shader parameters.

Provided Shaders

Writing shaders is difficult. The programmer must be aware of an endless series of pitfalls inherent in the OpenGL API and the shading language. While the r2 package does allow users to write their own shaders, the intention has always been to provide a small set of general purpose shaders that cover the majority of the use cases in modern games and simulations. The instance shaders provided by default are:

| Shader | Description |

|---|---|

| R2SurfaceShaderBasicSingle | Basic textured surface with normal mapping, specular mapping, emission mapping, and conditional discarding based on alpha. |

| R2SurfaceShaderBasicReflectiveSingle | Basic textured surface with pseudo reflections from a cube map, normal mapping, specular mapping, emission mapping, and conditional discarding based on alpha. |

Types

In the r2 package, materials are instances of R2MaterialType. Geometry renderers primarily consume instances that are associated with values of the R2MaterialOpaqueSingleType and R2MaterialOpaqueBatchedType types. Instance shaders are instances of the R2ShaderInstanceSingleType and R2ShaderInstanceBatchedType types.

Shaders: Light

Overview

A light shader is a shader used to render the contributions of a light source. Light shaders in the r2 package are only used within the context of deferred rendering.

Stencils

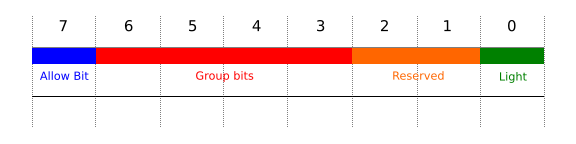

Reserved Bits

The current stencil buffer layout used by the r2 package is as follows:

Bit 0 is used for light clip volumes.

Bits 1-2 are reserved for future use.

Allow Bit

The r2 package reserves a single bit in the current stencil buffer, known as the allow bit. In all subsequent rendering operations, a pixel may only be written if the corresponding allow bit in the stencil buffer is true.

The stencil buffer allow bits are populated via the use of a stencil renderer. The user specifies a series of instances whose only purpose is to either enable or disable the allow bit for each rendered pixel. Users may specify whether instances are positive or negative. Positive instances set the allow bit to true for each overlapped pixel, and negative instances set the allow bit to false for each overlapped pixel.

Lighting

Overview

The following sections of documentation attempt to describe the theory and implementation of lighting in the r2 package. All lighting in the package is dynamic- there is no support for precomputed lighting and all contributions from lights are recalculated every time a scene is rendered. Lighting is configured by adding instances of R2LightType to a scene.

Diffuse/Specular Terms

The light applied to a surface by a given light is divided into diffuse and specular terms [15]. The actual light applied to a surface is dependent upon the properties of the surface. Conceptually, the diffuse and specular terms are multiplied by the final color of the surface and summed. In practice, the materials applied to surfaces have control over how light is actually applied to the surface. For example, materials may include a specular map which is used to manipulate the specular term as it is applied to the surface. Additionally, if a light supports attenuation, then the diffuse and specular terms are scaled by the attenuation factor prior to being applied.

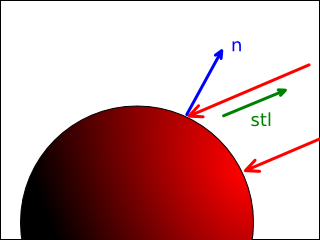

The diffuse term is modelled by Lambertian reflectance. Specifically, the amount of diffuse light reflected from a surface is given by diffuse in LightDiffuse.hs:

module LightDiffuse where

import qualified Color3

import qualified Direction

import qualified Normal

import qualified Spaces

import qualified Vector3f

diffuse :: Direction.T Spaces.Eye -> Normal.T -> Color3.T -> Float -> Vector3f.T

diffuse stl n light_color light_intensity =

let

factor = max 0.0 (Vector3f.dot3 stl n)

light_scaled = Vector3f.scale light_color light_intensity

in

Vector3f.scale light_scaled factorWhere stl is a unit length direction vector from the surface to the light source, n is the surface normal vector, light_color is the light color, and light_intensity is the light intensity. Informally, the algorithm determines how much diffuse light should be reflected from a surface based on how directly that surface points towards the light. When stl == n, Vector3f.dot3 stl n == 1.0, and therefore the light is reflected exactly as received. When stl is perpendicular to n (such that Vector3f.dot3 stl n == 0.0 ), no light is reflected at all. If the two directions are greater than 90° perpendicular, the dot product is negative, but the algorithm clamps negative values to 0.0 so the effect is the same.

The specular term is modelled either by Phong or Blinn-Phong reflection. The r2 package provides light shaders that provide both Phong and Blinn-Phong specular lighting and the user may freely pick between implementations. For the sake of simplicity, the rest of this documentation assumes that Blinn-Phong shading is being used. Specifically, the amount of specular light reflected from a surface is given by specularBlinnPhong in LightSpecular.hs:

module LightSpecular where

import qualified Color3

import qualified Direction

import qualified Normal

import qualified Reflection

import qualified Spaces

import qualified Specular

import qualified Vector3f

specularPhong :: Direction.T Spaces.Eye -> Direction.T Spaces.Eye -> Normal.T -> Color3.T -> Float -> Specular.T -> Vector3f.T

specularPhong stl view n light_color light_intensity (Specular.S surface_spec surface_exponent) =

let

reflection = Reflection.reflection view n

factor = (max 0.0 (Vector3f.dot3 reflection stl)) ** surface_exponent

light_raw = Vector3f.scale light_color light_intensity

light_scaled = Vector3f.scale light_raw factor

in

Vector3f.mult3 light_scaled surface_spec

specularBlinnPhong :: Direction.T Spaces.Eye -> Direction.T Spaces.Eye -> Normal.T -> Color3.T -> Float -> Specular.T -> Vector3f.T

specularBlinnPhong stl view n light_color light_intensity (Specular.S surface_spec surface_exponent) =

let

reflection = Reflection.reflection view n

factor = (max 0.0 (Vector3f.dot3 reflection stl)) ** surface_exponent

light_raw = Vector3f.scale light_color light_intensity

light_scaled = Vector3f.scale light_raw factor

in

Vector3f.mult3 light_scaled surface_specWhere stl is a unit length direction vector from the surface to the light source, view is a unit length direction vector from the observer to the surface, n is the surface normal vector, light_color is the light color, light_intensity is the light intensity, surface_exponent is the specular exponent defined by the surface, and surface_spec is the surface specularity factor.

The specular exponent is a value, ordinarily in the range [0, 255], that controls how sharp the specular highlights appear on the surface. The exponent is a property of the surface, as opposed to being a property of the light. Low specular exponents result in soft and widely dispersed specular highlights (giving the appearance of a rough surface), while high specular exponents result in hard and focused highlights (giving the appearance of a polished surface). As an example, three models lit with progressively lower specular exponents from left to right ( 128, 32, and 8, respectively):

Attenuation

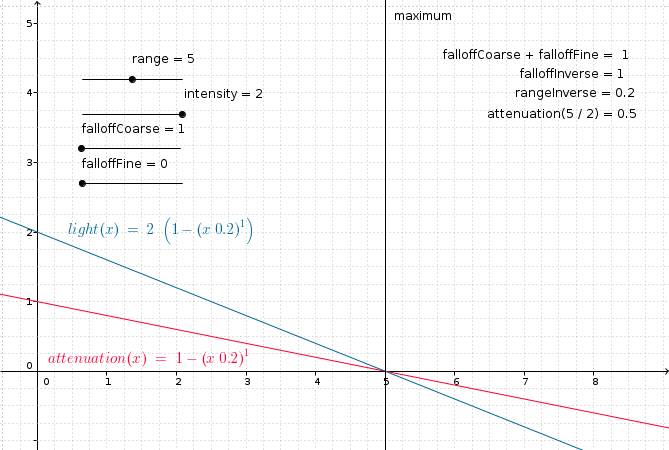

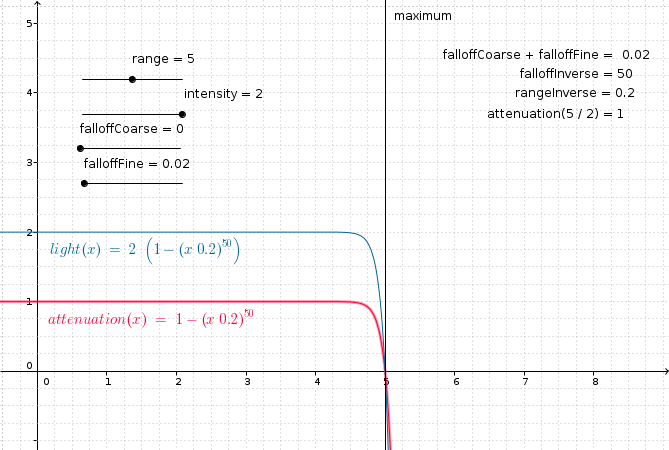

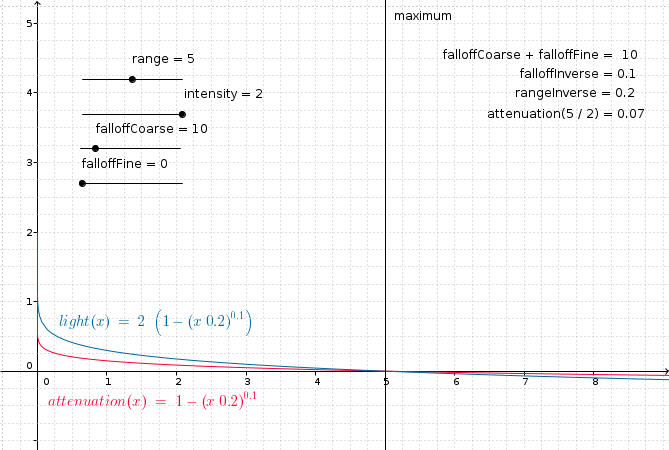

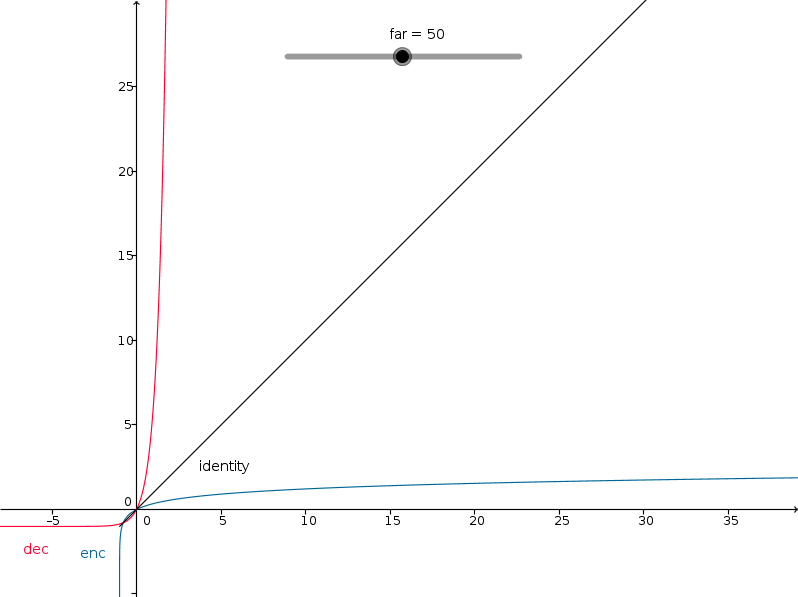

Attenuation is the property of the influence of a given light on a surface in inverse proportion to the distance from the light to the surface. In other words, for lights that support attenuation, the further a surface is from a light source, the less that surface will appear to be lit by the light. For light types that support attenuation, an attenuation factor is calculated based on a given inverse_maximum_range (where the maximum_range is a light-type specific positive value that represents the maximum possible range of influence for the light), a configurable inverse falloff value, and the current distance between the surface being lit and the light source. The attenuation factor is a value in the range [0.0, 1.0], with 1.0 meaning "no attenuation" and 0.0 meaning "maximum attenuation". The resulting attenuation factor is multiplied by the raw unattenuated light values produced for the light in order to produce the illusion of distance attenuation. Specifically:

module Attenuation where attenuation_from_inverses :: Float -> Float -> Float -> Float attenuation_from_inverses inverse_maximum_range inverse_falloff distance = max 0.0 (1.0 - (distance * inverse_maximum_range) ** inverse_falloff) attenuation :: Float -> Float -> Float -> Float attenuation maximum_range falloff distance = attenuation_from_inverses (1.0 / maximum_range) (1.0 / falloff) distance

Given the above definitions, a number of observations can be made.

If maximum_range == 0, then the inverse range is undefined, and therefore the results of lighting are undefined. The r2 package handles this case by raising an exception when the light is created.

If falloff == 0, then the inverse falloff is undefined, and therefore the results of lighting are undefined. The r2 package handles this case by raising an exception when the light is created.

As falloff decreases towards 0.0, then the attenuation curve remains at 1.0 for increasingly higher distance values before falling sharply to 0.0:

As falloff increases away from 0.0, then the attenuation curve decreases more for lower distance values:

Lighting: Directional

Overview

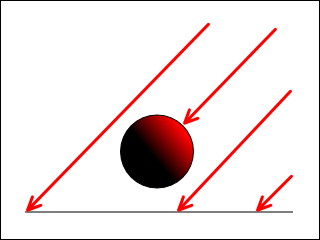

Directional lighting is the most trivial form of lighting provided by the r2 package. A directional light is a light that emits parallel rays of light in a given eye space direction. It has a color and an intensity, but does not have an origin and therefore is not attenuated over distance. It does not cause objects to cast shadows.

Application

The final light applied to the surface is given by directional (Directional.hs), where sr, sg, sb are the red, green, and blue channels, respectively, of the surface being lit. Note that the surface-to-light vector stl is simply the negation of the light direction.

module Directional where

import qualified Color4

import qualified Direction

import qualified LightDirectional

import qualified LightDiffuse

import qualified LightSpecular

import qualified Normal

import qualified Position3

import qualified Spaces

import qualified Specular

import qualified Vector3f

import qualified Vector4f

directional :: Direction.T Spaces.Eye -> Normal.T -> Position3.T Spaces.Eye -> LightDirectional.T -> Specular.T -> Color4.T -> Vector3f.T

directional view n position light specular (Vector4f.V4 sr sg sb _) =

let

stl = Vector3f.normalize (Vector3f.negation position)

light_color = LightDirectional.color light

light_intensity = LightDirectional.intensity light

light_d = LightDiffuse.diffuse stl n light_color light_intensity

light_s = LightSpecular.specularBlinnPhong stl view n light_color light_intensity specular

lit_d = Vector3f.mult3 (Vector3f.V3 sr sg sb) light_d

lit_s = Vector3f.add3 lit_d light_s

in

lit_s

Types

Directional lights are represented in the r2 package by the R2LightDirectionalScreenSingle type.

Lighting: Spherical

Overview

A spherical light in the r2 package is a light that emits rays of light in all directions from a given origin specified in eye space up to a given maximum radius.

The term spherical comes from the fact that the light has a defined radius. Most rendering systems instead use point lights that specify multiple attenuation constants to control how light is attenuated over distance. The problem with this approach is that it requires solving a quadratic equation to determine a minimum bounding sphere that can contain the light. Essentially, the programmer/artist is forced to determine "at which radius does the contribution from this light effectively reach zero?". With spherical lights, the maximum radius is declared up front, and a single falloff value is used to determine the attenuation curve within that radius. This makes spherical lights more intuitive to use: The programmer/artist simply places a sphere within the scene and knows exactly from the radius which objects are lit by it. It also means that bounding light volumes can be trivially constructed from unit spheres by simply scaling those spheres by the light radius, when performing deferred rendering.

Application

The final light applied to the surface is given by spherical (Spherical.hs), where sr, sg, sb are the red, green, and blue channels, respectively, of the surface being lit. The surface-to-light vector stl is calculated by normalizing the negation of the difference between the the current eye space surface_position and the eye space origin of the light.

module Spherical where

import qualified Attenuation

import qualified Color4

import qualified Direction

import qualified LightDiffuse

import qualified LightSpecular

import qualified LightSpherical

import qualified Normal

import qualified Position3

import qualified Specular

import qualified Spaces

import qualified Vector3f

import qualified Vector4f

spherical :: Direction.T Spaces.Eye -> Normal.T -> Position3.T Spaces.Eye -> LightSpherical.T -> Specular.T -> Color4.T -> Vector3f.T

spherical view n surface_position light specular (Vector4f.V4 sr sg sb _) =

let

position_diff = Position3.sub3 surface_position (LightSpherical.origin light)

stl = Vector3f.normalize (Vector3f.negation position_diff)

distance = Vector3f.magnitude (position_diff)

attenuation = Attenuation.attenuation (LightSpherical.radius light) (LightSpherical.falloff light) distance

light_color = LightSpherical.color light

light_intensity = LightSpherical.intensity light

light_d = LightDiffuse.diffuse stl n light_color light_intensity

light_s = LightSpecular.specularBlinnPhong stl view n light_color light_intensity specular

light_da = Vector3f.scale light_d attenuation

light_sa = Vector3f.scale light_s attenuation

lit_d = Vector3f.mult3 (Vector3f.V3 sr sg sb) light_da

lit_s = Vector3f.add3 lit_d light_sa

in

lit_s

Lighting: Projective

- 2.15.1. Overview

- 2.15.2. Algorithm

- 2.15.3. Back projection

- 2.15.4. Clamping

- 2.15.5. Attenuation

- 2.15.6. Application

Overview

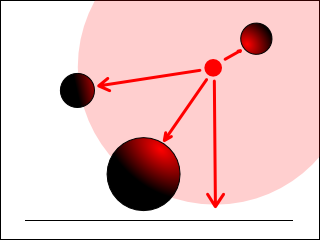

A projective light in the r2 package is a light that projects a texture onto the scene from a given origin specified in eye space up to a given maximum radius. Projective lights are the only types of lights in the r2 package that are able to project shadows.

Algorithm

At a basic level, a projective light performs the same operations that occur when an ordinary 3D position is projected onto the screen during rendering. During normal rendering, a point p given in world space is transformed to eye space given the current camera's view matrix, and is then transformed to clip space using the current camera's projection matrix. During rendering of a scene lit by a projective light, a given point q in the scene is transformed back to world space given the current camera's inverse view matrix, and is then transformed to eye space from the point of view of the light (subsequently referred to as light eye space) using the light's view matrix. Finally, q is transformed to clip space from the point of view of the light (subsequently referred to as light clip space) using the light's projection matrix. It should be noted (in order to indicate that there is nothing unusual about the light's view or projection matrices) that if the camera and light have the same position, orientation, scale, and projection, then the resulting transformed values of q and p are identical. The resulting transformed value of q is mapped from the range [(-1, -1, -1), (1, 1, 1)] to [(0, 0, 0), (1, 1, 1)], and the resulting coordinates are used to retrieve a texel from the 2D texture associated with the light.

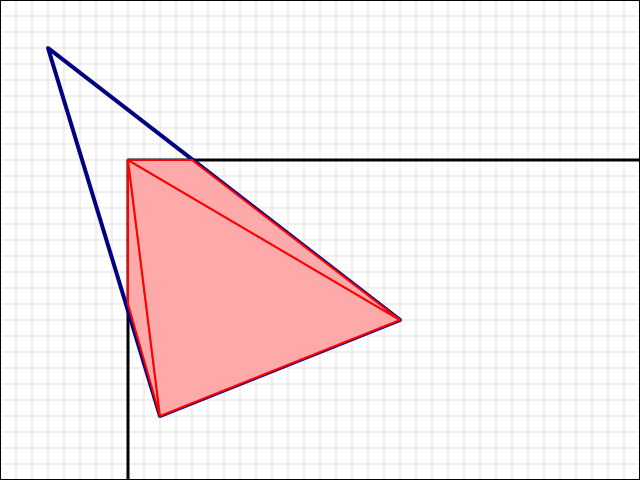

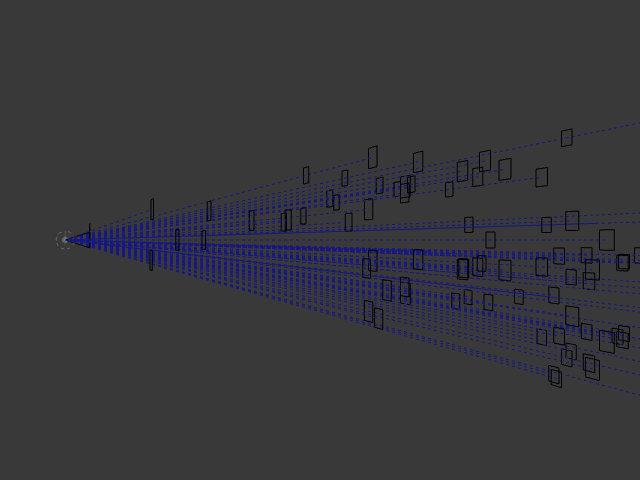

Intuitively, an ordinary perspective projection will cause the light to appear to take the shape of a frustum:

There are two issues with the projective lighting algorithm that also have to be solved: back projection and clamping.

Back projection

The algorithm described above will produce a so-called dual or back projection. In other words, the texture will be projected along the view direction of the camera, but will also be projected along the negative view direction [17]. The visual result is that it appears that there are two projective lights in the scene, oriented in opposite directions. As mentioned previously, given the typical projection matrix, the w component of a given clip space position is the negation of the eye space z component. Because it is assumed that the observer is looking towards the negative z direction, all positions that are in front of the observer must have positive w components. Therefore, if w is negative, then the position is behind the observer. The standard fix for this problem is to check to see if the w component of the light-clip space coordinate is negative, and simply return a pure black color (indicating no light contribution) rather than sampling from the projected texture.

Clamping

The algorithm described above takes an arbitrary point in the scene and projects it from the point of view of the light. There is no guarantee that the point actually falls within the light's view frustum (although this is mitigated slightly by the r2 package's use of light volumes for deferred rendering), and therefore the calculated texture coordinates used to sample from the projected texture are not guaranteed to be in the range [(0, 0), (1, 1)]. In order to get the intended visual effect, the texture used must be set to clamp-to-edge and have black pixels on all of the edges of the texture image, or clamp-to-border with a black border color. Failing to do this can result in strange visual anomalies, as the texture will be unexpectedly repeated or smeared across the area outside of the intersection between the light volume and the receiving surface:

The r2 package will raise an exception if a non-clamped texture is assigned to a projective light.

Application

The final light applied to the surface is given by projective in Projective.hs, where sr, sg, sb are the red, green, and blue channels, respectively, of the surface being lit. The surface-to-light vector stl is calculated by normalizing the negation of the difference between the the current eye space surface_position and the eye space origin of the light.

module Projective where

import qualified Attenuation

import qualified Color3

import qualified Color4

import qualified Direction

import qualified LightDiffuse

import qualified LightSpecular

import qualified LightProjective

import qualified Normal

import qualified Position3

import qualified Specular

import qualified Spaces

import qualified Vector3f

import qualified Vector4f

projective :: Direction.T Spaces.Eye -> Normal.T -> Position3.T Spaces.Eye -> LightProjective.T -> Specular.T -> Float -> Color3.T -> Color4.T -> Vector3f.T

projective view n surface_position light specular shadow texture (Vector4f.V4 sr sg sb _) =

let

position_diff = Position3.sub3 surface_position (LightProjective.origin light)

stl = Vector3f.normalize (Vector3f.negation position_diff)

distance = Vector3f.magnitude (position_diff)

attenuation_raw = Attenuation.attenuation (LightProjective.radius light) (LightProjective.falloff light) distance

attenuation = attenuation_raw * shadow

light_color = Vector3f.mult3 (LightProjective.color light) texture

light_intensity = LightProjective.intensity light

light_d = LightDiffuse.diffuse stl n light_color light_intensity

light_s = LightSpecular.specularBlinnPhong stl view n light_color light_intensity specular

light_da = Vector3f.scale light_d attenuation

light_sa = Vector3f.scale light_s attenuation

lit_d = Vector3f.mult3 (Vector3f.V3 sr sg sb) light_da

lit_s = Vector3f.add3 lit_d light_sa

in

lit_s

The given shadow factor is a value in the range [0, 1], where 0 indicates that the lit point is fully in shadow for the current light, and 1 indicates that the lit point is not in shadow. This is calculated for variance shadows and is assumed to be 1 for lights without shadows. As can be seen, a value of 0 has the effect of fully attenuating the light.

The color denoted by texture is assumed to have been sampled from the projected texture. Assuming the eye space position being shaded p, the matrix to get from eye space to light-clip space is given by The final light applied to the surface is given by projective_matrix in ProjectiveMatrix.hs:

module ProjectiveMatrix where

import qualified Matrix4f

projective_matrix :: Matrix4f.T -> Matrix4f.T -> Matrix4f.T -> Matrix4f.T

projective_matrix camera_view light_view light_projection =

case Matrix4f.inverse camera_view of

Just cv -> Matrix4f.mult (Matrix4f.mult light_projection light_view) cv

Nothing -> undefined -- A view matrix is always invertible

Shadows

Overview

Because the r2 package implements local illumination, it is necessary to associate shadows with those light sources capable of projecting them (currently only projective lights). The r2 package currently only supports variance shadow mapping. So-called mapped shadows allow efficient per-pixel shadows to be calculated with varying degrees of visual quality.

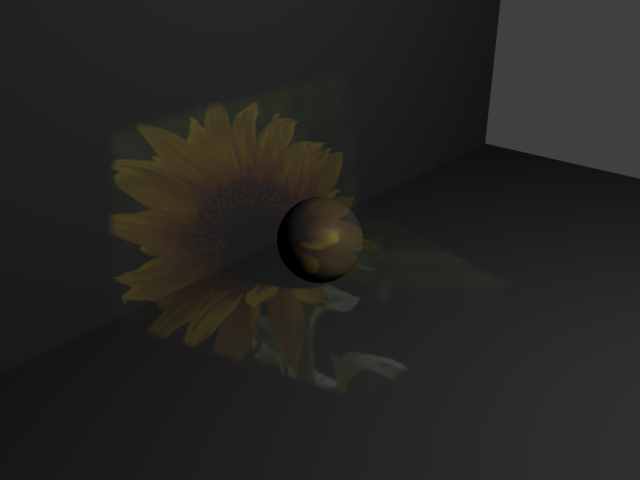

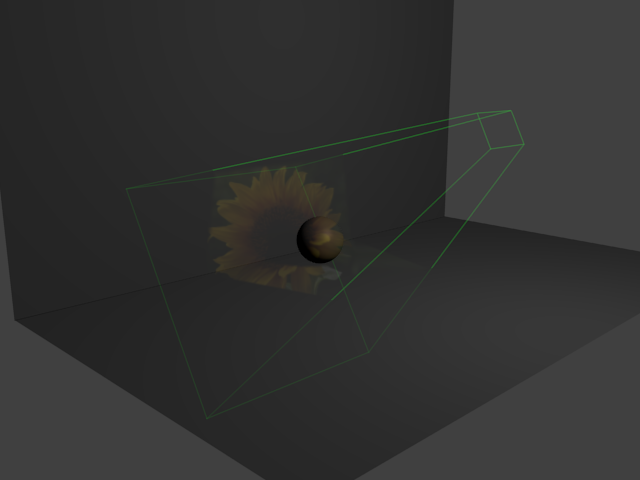

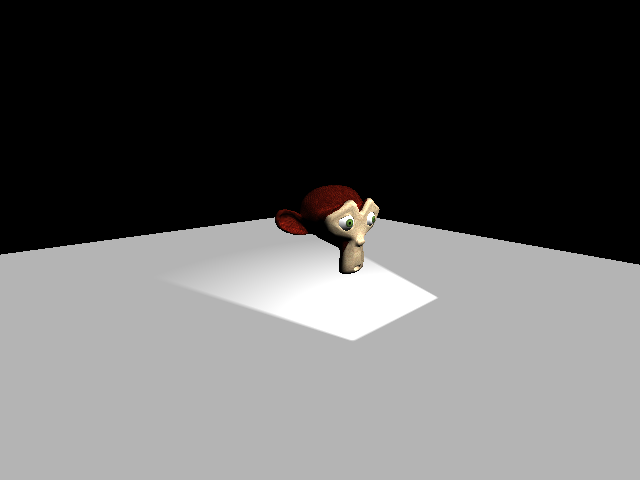

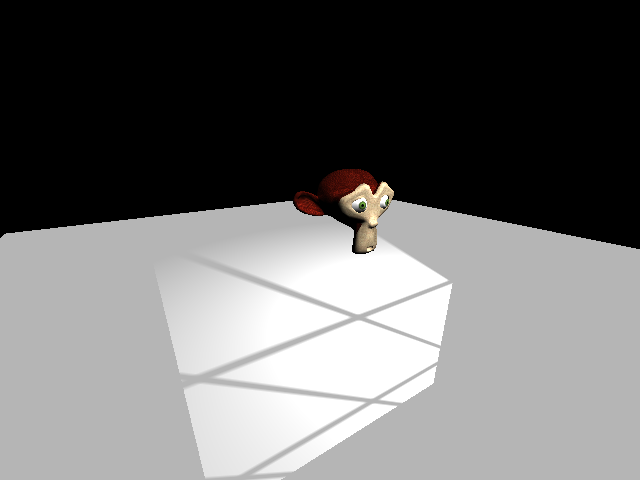

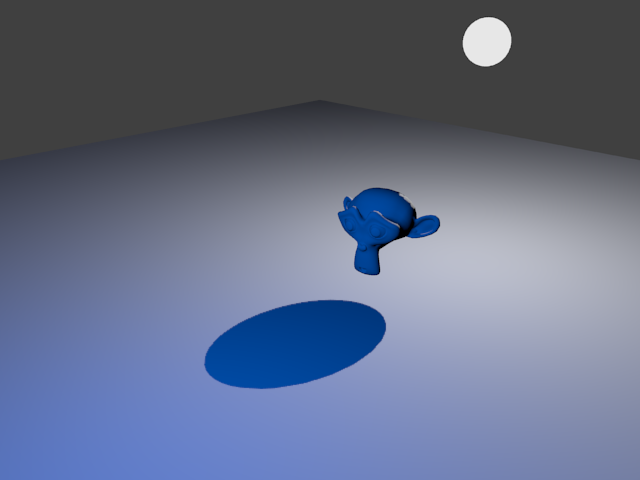

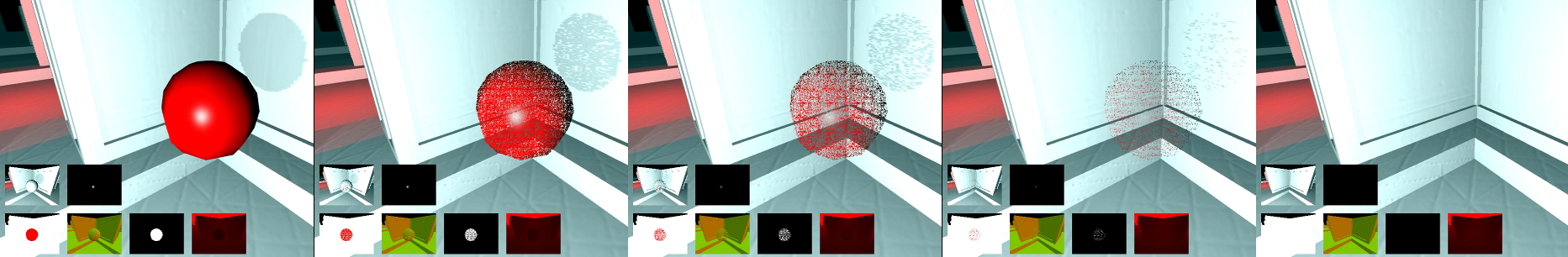

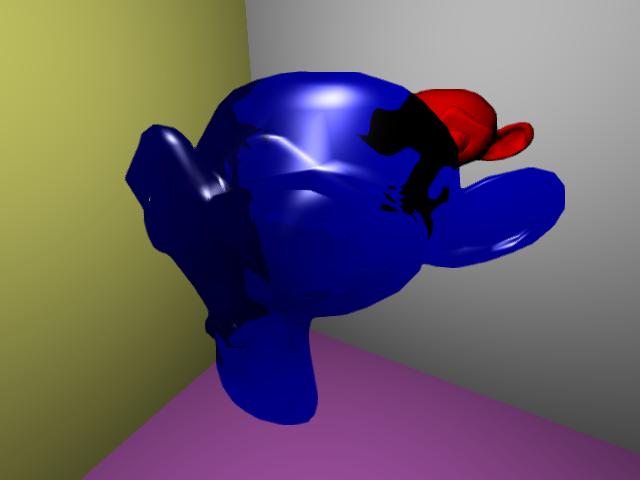

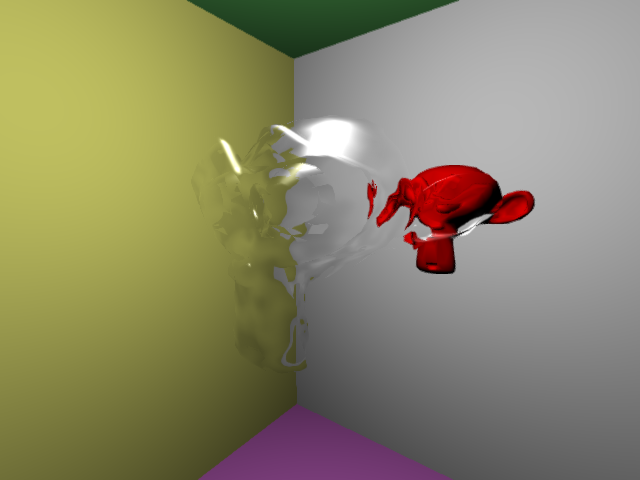

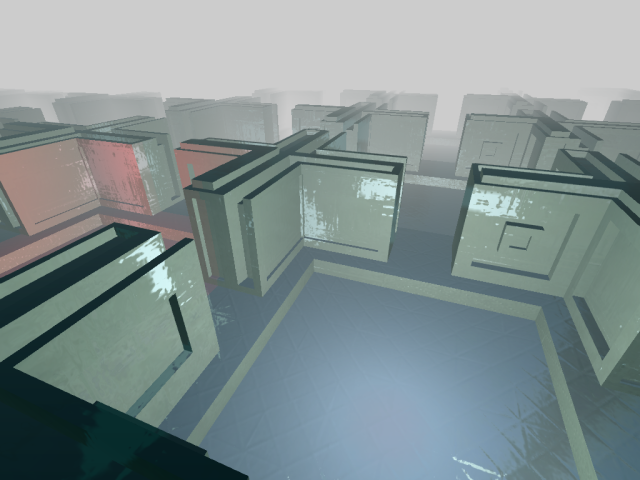

Shadow Geometry

Because the system requires the programmer to explicitly and separately state that an opaque instance is visible in the scene, and that an opaque instance is casting a shadow, it becomes possible to effectively specify different shadow geometry for a given instance. As an example, a very complex and high resolution mesh may still have the silhouette of a simple sphere, and therefore the user can separately add the high resolution mesh to a scene as a visible instance, but add a low resolution version of the mesh as an invisible shadow-casting instance with the same transform. As a rather extreme example, assuming a high resolution mesh m0 added to the scene as both a visible instance and a shadow caster:

A low resolution mesh m1 added to the scene as both a visible instance and shadow caster:

Now, with m1 added as only a shadow caster, and m0 added as only a visible instance:

Using lower resolution geometry for shadow casters can lead to efficiency gains on systems where vertex processing is expensive.

Shadows: Variance Mapping

Overview

Variance shadow mapping is a technique that can give attractive soft-edged shadows. Using the same view and projection matrices used to apply projective lights, a depth-variance image of the current scene is rendered, and those stored depth distribution values are used to determine the probability that a given point in the scene is in shadow with respect to the current light.

The algorithm implemented in the r2 package is described in GPU Gems 3, which is a set of improvements to the original variance shadow mapping algorithm by William Donnelly and Andrew Lauritzen. The r2 package implements all of the improvements to the algorithm except summed area tables. The package also provides optional box blurring of shadows as described in the chapter.

Algorithm

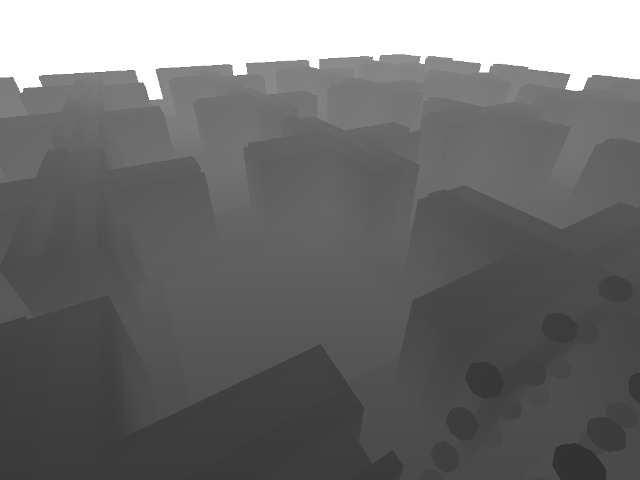

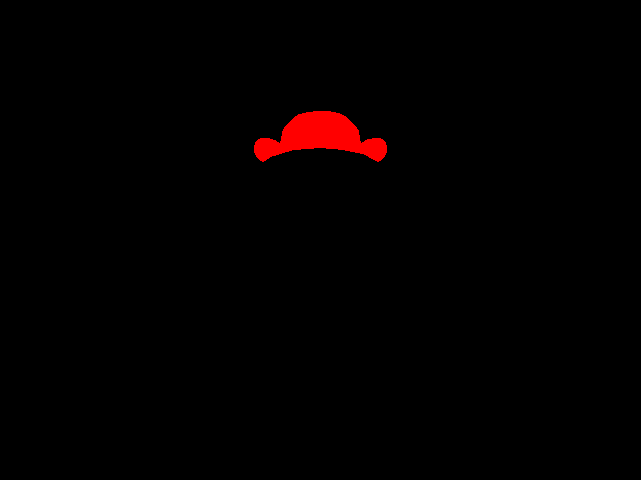

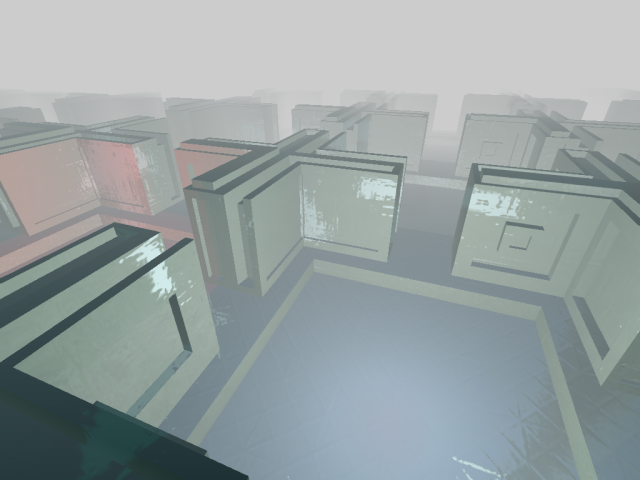

Prior to actually rendering a scene, shadow maps are generated for all shadow-projecting lights in the scene. A shadow map for variance shadow mapping, for a light k, is a two-component red/green image of all of the shadow casters associated with k in the visible set. The image is produced by rendering the instances from the point of view of k. The red channel of each pixel in the image represents the logarithmic depth of the closest surface at that pixel, and the green channel represents the depth squared (literally depth * depth ). For example:

Then, when actually applying lighting during rendering of the scene, a given eye space position p is transformed to light-clip space and then mapped to the range [(0, 0, 0), (1, 1, 1)] in order to sample the depth and depth squared values (d, ds) from the shadow map (as with sampling from a projected texture with projective lighting).

As stated previously, the intent of variance shadow mapping is to essentially calculate the probability that a given point is in shadow. A one-tailed variant of Chebyshev's inequality is used to calculate the upper bound u on the probability that, given (d, ds), a given point with depth t is in shadow:

module ShadowVarianceChebyshev0 where

chebyshev :: (Float, Float) -> Float -> Float

chebyshev (d, ds) t =

let p = if t <= d then 1.0 else 0.0

variance = ds - (d * d)

du = t - d

p_max = variance / (variance + (du * du))

in max p p_max

factor :: (Float, Float) -> Float -> Float

factor = chebyshev

One of the improvements suggested to the original variance shadow algorithm is to clamp the minimum variance to some small value (the r2 package uses 0.00002 by default, but this is configurable on a per-shadow basis). The equation above becomes:

module ShadowVarianceChebyshev1 where

data T = T {

minimum_variance :: Float

} deriving (Eq, Show)

chebyshev :: (Float, Float) -> Float -> Float -> Float

chebyshev (d, ds) min_variance t =

let p = if t <= d then 1.0 else 0.0

variance = max (ds - (d * d)) min_variance

du = t - d

p_max = variance / (variance + (du * du))

in max p p_max

factor :: T -> (Float, Float) -> Float -> Float

factor shadow (d, ds) t =

chebyshev (d, ds) (minimum_variance shadow) t

The above is sufficient to give shadows that are roughly equivalent in visual quality to basic shadow mapping with the added benefit of being generally better behaved and with far fewer artifacts. However, the algorithm can suffer from light bleeding, where the penumbrae of overlapping shadows can be unexpectedly bright despite the fact that the entire area should be in shadow. One of the suggested improvements to reduce light bleeding is to modify the upper bound u such that all values below a configurable threshold are mapped to zero, and values above the threshold are rescaled to map them to the range [0, 1]. The original article suggests a linear step function applied to u:

module ShadowVarianceChebyshev2 where

data T = T {

minimum_variance :: Float,

bleed_reduction :: Float

} deriving (Eq, Show)

chebyshev :: (Float, Float) -> Float -> Float -> Float

chebyshev (d, ds) min_variance t =

let p = if t <= d then 1.0 else 0.0

variance = max (ds - (d * d)) min_variance

du = t - d

p_max = variance / (variance + (du * du))

in max p p_max

clamp :: Float -> (Float, Float) -> Float

clamp x (lower, upper) = max (min x upper) lower

linear_step :: Float -> Float -> Float -> Float

linear_step lower upper x = clamp ((x - lower) / (upper - lower)) (0.0, 1.0)

factor :: T -> (Float, Float) -> Float -> Float

factor shadow (d, ds) t =

let u = chebyshev (d, ds) (minimum_variance shadow) t in

linear_step (bleed_reduction shadow) 1.0 u

The amount of light bleed reduction is adjustable on a per-shadow basis.

To reduce problems involving numeric inaccuracy, the original article suggests the use of 32-bit floating point textures in depth variance maps. The r2 package allows 16-bit or 32-bit textures, configurable on a per-shadow basis.

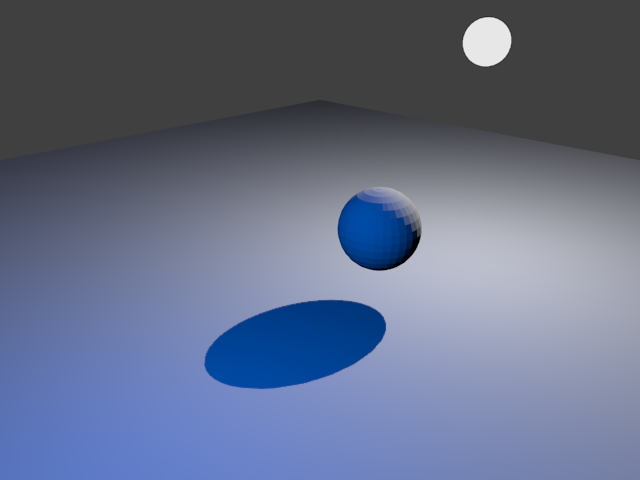

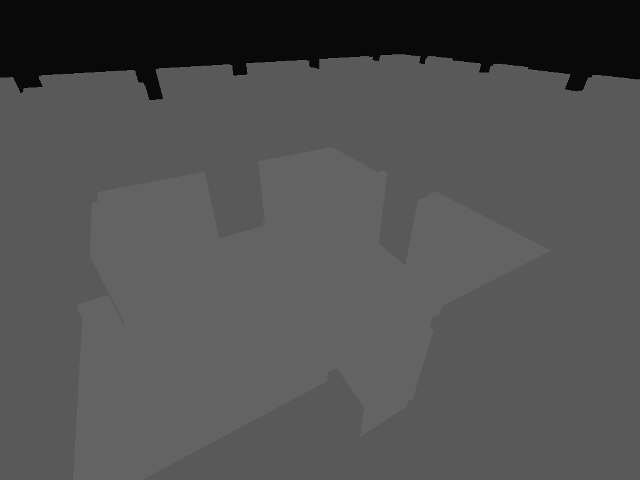

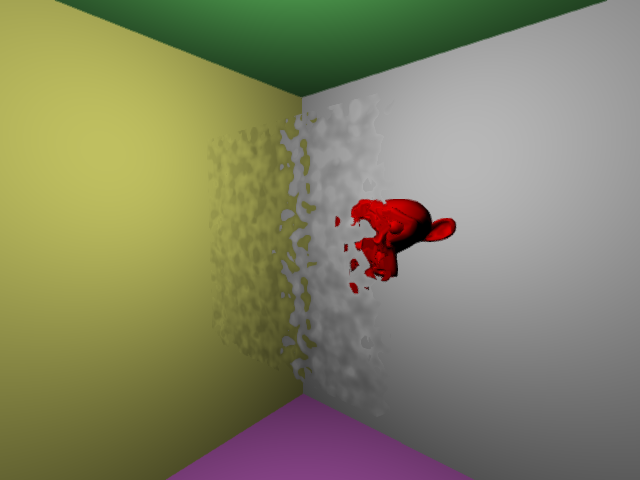

Finally, as mentioned previously, the r2 package allows both optional box blurring and mipmap generation for shadow maps. Both blurring and mipmapping can reduce aliasing artifacts, with the former also allowing the edges of shadows to be significantly softened as a visual effect:

Advantages

The main advantage of variance shadow mapping is that they can essentially be thought of as much better behaved version of basic shadow mapping that just happen to have built-in softening and filtering. Variance shadows typically require far less in the way of scene-specific tuning to get good results.

Disadvantages

One disadvantage of variance shadows is that for large shadow maps, filtering quickly becomes a major bottleneck. On reasonably old hardware such as the Radeon 4670, one 8192x8192 shadow map with two 16-bit components takes too long to filter to give a reliable 60 frames per second rendering rate. Shadow maps of this size are usually used to simulate the influence of the sun over large outdoor scenes.

Types

Variance mapped shadows are represented by the R2ShadowDepthVarianceType type, and can be associated with projective lights.

Rendering of depth-variance images is handled by implementations of the R2ShadowMapRendererType type.

Deferred Rendering

Overview

Deferred rendering is a rendering technique where all of the opaque objects in a given scene are rendered into a series of buffers, and then lighting is applied to those buffers in screen space. This is in contrast to forward rendering, where all lighting is applied to objects as they are rendered.

One major advantage of deferred rendering is a massive reduction in the number of shaders required (traditional forward rendering requires s * l shaders, where s is the number of different object surface types in the scene, and l is the number of different light types). In contrast, deferred rendering requires s + l shaders, because surface and lighting shaders are applied separately.

Traditional forward rendering also suffers severe performance problems as the number of lights in the scene increases, because it is necessary to recompute all of the surface attributes of an object each time a light is applied. In contrast, deferred rendering calculates all surface attributes of all objects once, and then reuses them when lighting is applied.

However, deferred renderers are usually incapable of rendering translucent objects. The deferred renderer in the r2 package is no exception, and a separate set of renderers are provided to render translucent objects.

Due to the size of the subject, the deferred rendering infrastructure in the r2 package is described in several sections. The rendering of opaque geometry is described in the Geometry section, the subsequent lighting of that geometry is described in the Lighting section. The details of the position reconstruction algorithm, an algorithm utterly fundamental to deferred rendering, is described in Position Reconstruction.

Deferred Rendering: Geometry

- 2.19.1. Overview

- 2.19.2. Groups

- 2.19.3. Geometry Buffer

- 2.19.4. Algorithm

- 2.19.5. Ordering/Batching

- 2.19.6. Normal Compression

Overview

The first step in deferred rendering involves rendering all opaque instances in the current scene to a geometry buffer. This populated geometry buffer is then primarily used in later stages to calculate lighting, but can also be used to implement effects such as screen-space ambient occlusion and emission.

In the r2 package, the primary implementation of the deferred geometry rendering algorithm is the R2GeometryRenderer type.

Groups

Groups are a simple means to constrain the contributions of sets of specific light sources to sets of specific rendered instances. Instances and lights are assigned a group number in the range [1, 15]. If the programmer does not explicitly assign a number, the number 1 is assigned automatically. During rendering, the group number of each rendered instance is written to the stencil buffer. Then, when the light contribution is calculated for a light with group number n, only those pixels that have a corresponding value of n in the stencil buffer are allowed to be modified.

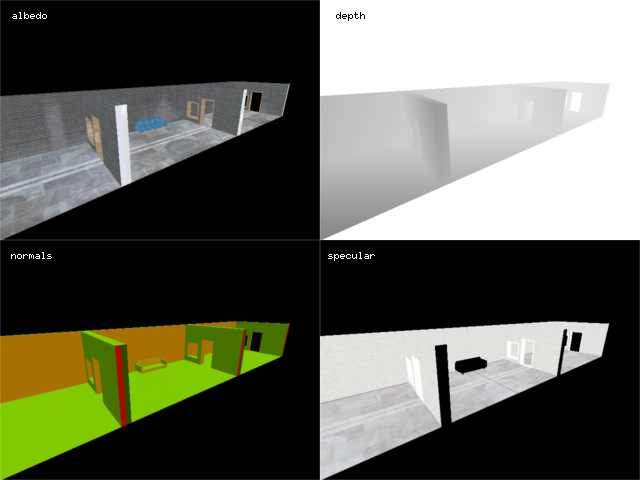

Geometry Buffer

A geometry buffer is a render target in which the surface attributes of objects are stored prior to being combined with the contents of a light buffer to produce a lit image.

One of the main implementation issues in any deferred renderer is deciding which surface attributes (such as position, albedo, normals, etc) to store and which to reconstruct. The more attributes that are stored, the less work is required during rendering to reconstruct those values. However, storing more attributes requires a larger geometry buffer and more memory bandwidth to actually populate that geometry buffer during rendering. The r2 package leans towards having a more compact geometry buffer and doing slightly more reconstruction work during rendering.

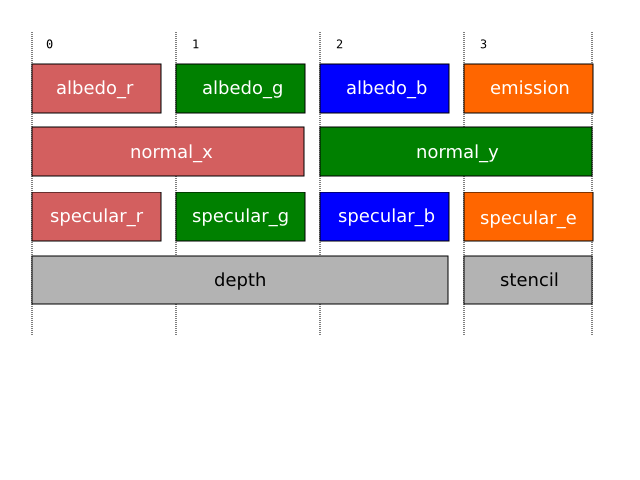

The r2 package explicitly stores the albedo, normals, emission level, and specular color of surfaces. Additionally, the depth buffer is sampled to recover the depth of surfaces. The eye-space positions of surfaces are recovered via an efficient position reconstruction algorithm which uses the current viewing projection and logarithmic depth value as input. In order to reduce the amount of storage required, three-dimensional eye-space normal vectors are stored compressed as two 16 half-precision floating point components via a simple mapping. This means that only 32 bits are required to store the vectors, and very little precision is lost. The precise format of the geometry buffer is as follows:

The albedo_r, albedo_g, and albedo_b components correspond to the red, green, and blue components of the surface, respectively. The emission component refers to the surface emission level. The normal_x and normal_y components correspond to the two components of the compressed surface normal vector. The specular_r, specular_g, and specular_b components correspond to the red, green, and blue components of the surface specularity. Surfaces that will not receive specular highlights simply have 0 for each component. The specular_e component holds the surface specular exponent divided by 256.

In the r2 package, geometry buffers are instances of R2GeometryBufferType.

Algorithm

An informal description of the geometry rendering algorithm as implemented in the r2 package is as follows:

- Set the current render target to a geometry buffer b.

- Enable writing to the depth and stencil buffers, and enable stencil testing. Enable depth testing such that only pixels with a depth less than or equal to the current depth are touched.

- For each group g:

- Configure stencil testing such that only pixels with the allow bit enabled are touched, and configure stencil writing such that the index of g is recorded in the stencil buffer.

- For each instance o in g:

- Render the surface albedo, eye space normals, specular color, and emission level of o into b. Normal mapping is performed during rendering, and if o does not have specular highlights, then a pure black (zero intensity) specular color is written. Effects such as environment mapping are considered to be part of the surface albedo and so are performed in this step.

Ordering/Batching

Due to the use of depth testing, the geometry rendering algorithm is effectively order independent: Instances can be rendered in any order and the final image will always be the same. However, there are efficiency advantages in rendering instances in a particular order. The most efficient order of rendering is the one that minimizes internal OpenGL state changes. NVIDIA's Beyond Porting presentation gives the relative cost of OpenGL state changes, from most expensive to least expensive, as [18]:

- Render target changes: 60,000/second

- Program bindings: 300,000/second

- Texture bindings: 1,500,000/second

- Vertex format (exact cost unspecified)

- UBO bindings (exact cost unspecified)

- Vertex Bindings (exact cost unspecified)

- Uniform Updates: 10,000,000/second

Therefore, it is beneficial to order rendering operations such that the most expensive state changes happen the least frequently.

The R2SceneOpaquesType type provides a simple interface that allows the programmer to specify instances without worrying about ordering concerns. When all instances have been submitted, they will be delivered to a given consumer (typically a geometry renderer) via the opaquesExecute method in the order that would be most efficient for rendering. Typically, this means that instances are first batched by shader, because switching programs is the second most expensive type of render state change. The shader-batched instances are then batched by material, in order to reduce the number of uniform updates that need to occur per shader.

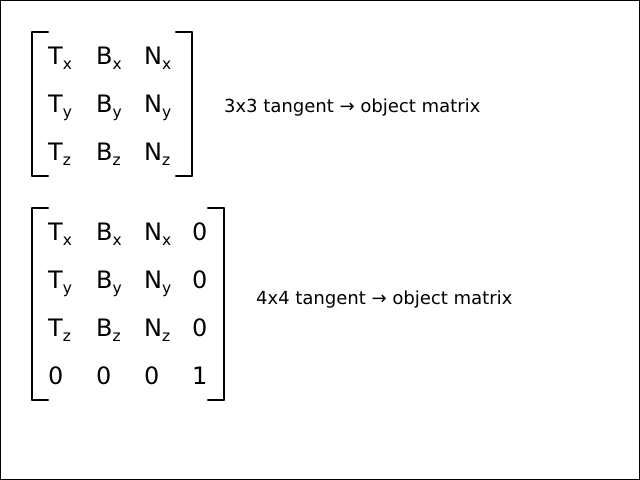

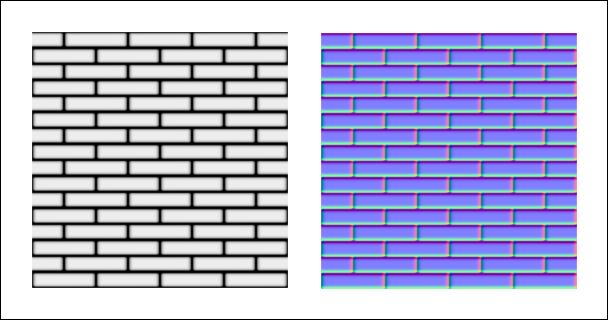

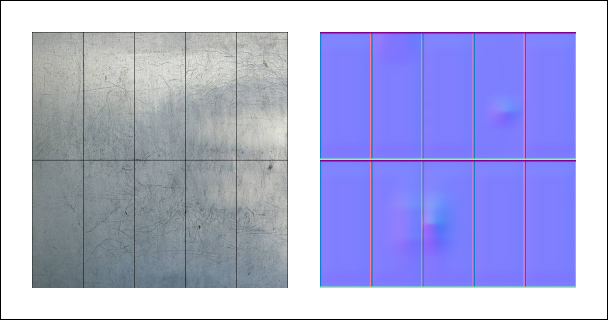

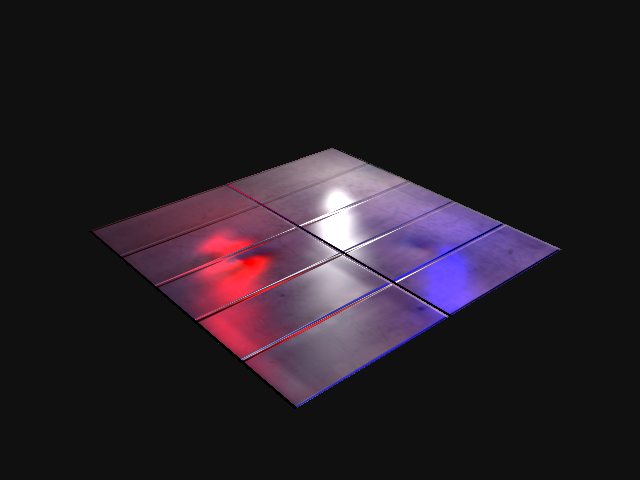

Normal Compression

The r2 package uses a Lambert azimuthal equal-area projection to store surface normal vectors in two components instead of three. This makes use of the fact that normalized vectors represent points on the unit sphere. The mapping from normal vectors to two-dimensional spheremap coordinates is given by compress NormalCompress.hs:

module NormalCompress where

import qualified Vector3f

import qualified Vector2f

import qualified Normal

compress :: Normal.T -> Vector2f.T

compress n =

let p = sqrt ((Vector3f.z n * 8.0) + 8.0)

x = (Vector3f.x n / p) + 0.5

y = (Vector3f.y n / p) + 0.5

in Vector2f.V2 x yThe mapping from two-dimensional spheremap coordinates to normal vectors is given by decompress NormalDecompress.hs:

module NormalDecompress where

import qualified Vector3f

import qualified Vector2f

import qualified Normal

decompress :: Vector2f.T -> Normal.T

decompress v =

let fn = Vector2f.V2 ((Vector2f.x v * 4.0) - 2.0) ((Vector2f.y v * 4.0) - 2.0)

f = Vector2f.dot2 fn fn

g = sqrt (1.0 - (f / 4.0))

x = (Vector2f.x fn) * g

y = (Vector2f.y fn) * g

z = 1.0 - (f / 2.0)

in Vector3f.V3 x y zDeferred Rendering: Lighting

Overview

The second step in deferred rendering involves rendering the light contributions of all light sources within a scene to a light buffer. The rendering algorithm requires sampling from a populated geometry buffer.

Light Buffer

A light buffer is a render target in which the light contributions of all light sources are summed in preparation for being combined with the surface albedo of a geometry buffer to produce a lit image.

A light buffer consists of a 32-bit RGBA diffuse image and a 32-bit RGBA specular image. Currently, the alpha channels of both images are unused and exist solely because OpenGL 3.3 does not provide a color-renderable 24-bit RGB format.

The r2 package offers the ability to disable specular lighting entirely if it is not needed, and so light buffer implementations provide the ability to avoid allocating an image for specular contributions if they will not be calculated.

In the r2 package, light buffers are instances of R2LightBufferType.

Light Clip Volumes

A light clip volume is a means of constraining the contributions of groups of light sources to a provided volume.

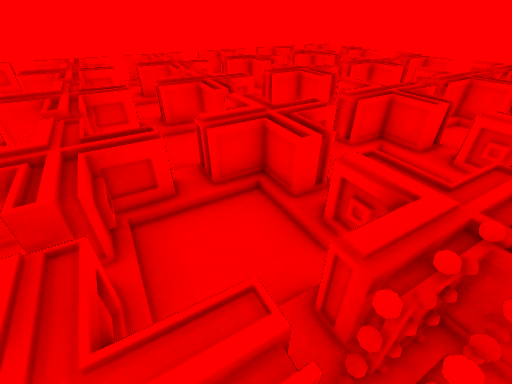

Because, like most renderers, the r2 package implements so-called local illumination, lights that do not have explicit shadow mapping enabled are able to bleed through solid objects:

Enabling shadow mapping for every single light source would be prohibitively expensive [3], but for some scenes, acceptable results can be achieved by simply preventing the light source from affecting pixels outside of a given clip volume.

The technique is implemented using the stencil buffer, using a single light clip volume bit.

- Disable depth writing, and enable depth testing using the standard less-than-or-equal-to depth function is used.

- For each light clip volume v:

- Clear the light clip volume bit in the stencil buffer.

- Configure stencil testing such that the stencil test always passes.

- Configure stencil writing such that:

- Only the light clip volume bit can be written.

- Pixels that fail the depth test will invert the value of the light clip volume bit (GL_INVERT).

- Pixels that pass the depth test leave the value of the light clip volume bit untouched.

- Pixels that pass the stencil test leave the value of the light clip volume bit untouched.

- Render both the front and back faces of v.

- Configure stencil testing such that only those pixels with both the allow bit and light clip volume bit set will be touched.

- Render all of the light sources associated with v.

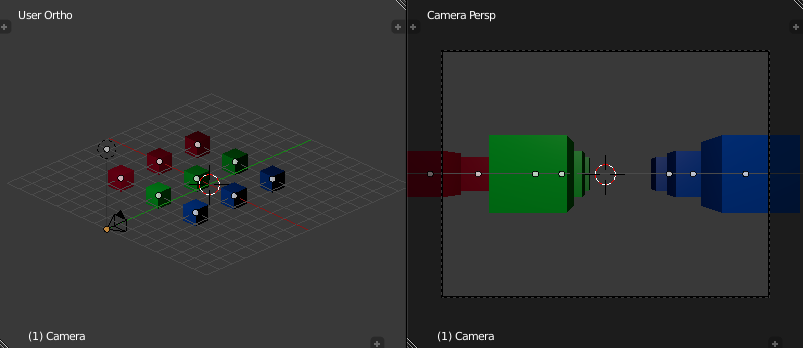

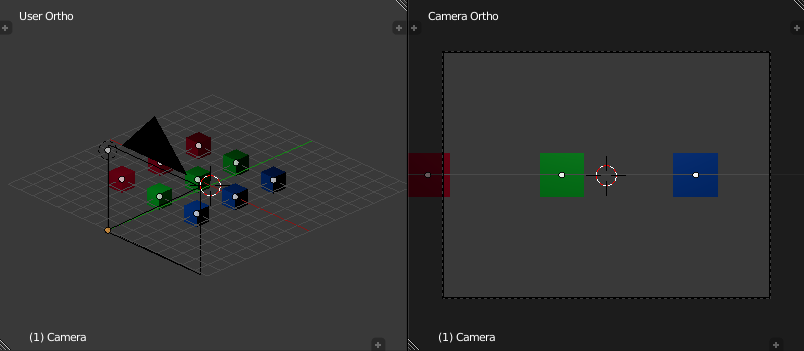

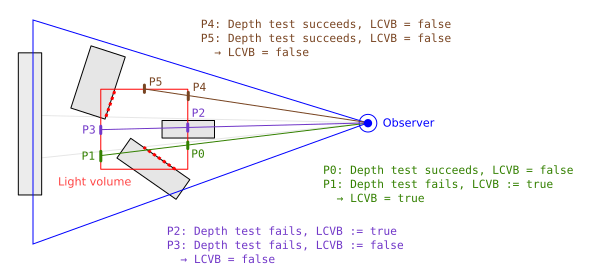

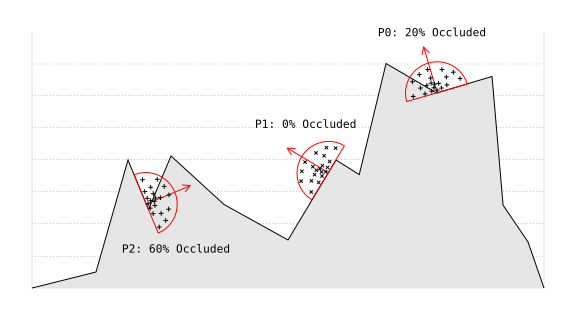

The reason the algorithm works can be inferred from the following diagram:

In the diagram, the grey polygons represent the already-rendered depths of the scene geometry [19]. If a point is inside or behind (from the perspective of the observer) one of the polygons, then the depth of the point is considered to be greater than the scene geometry.

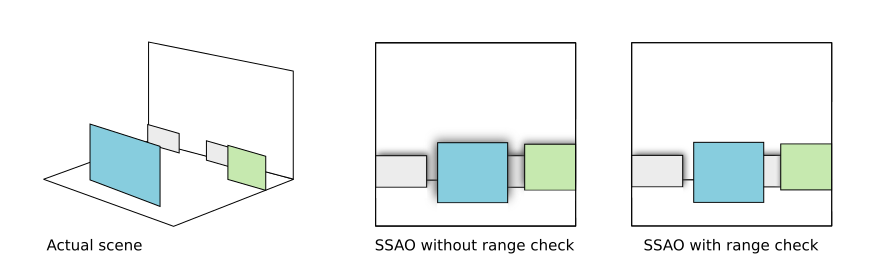

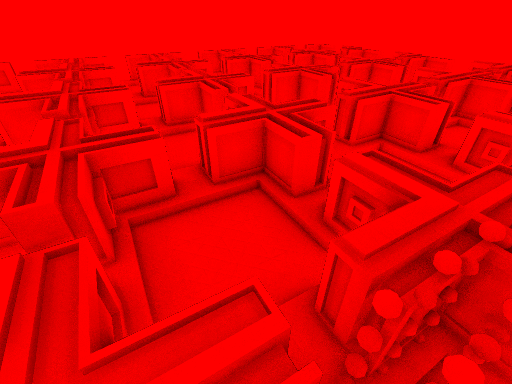

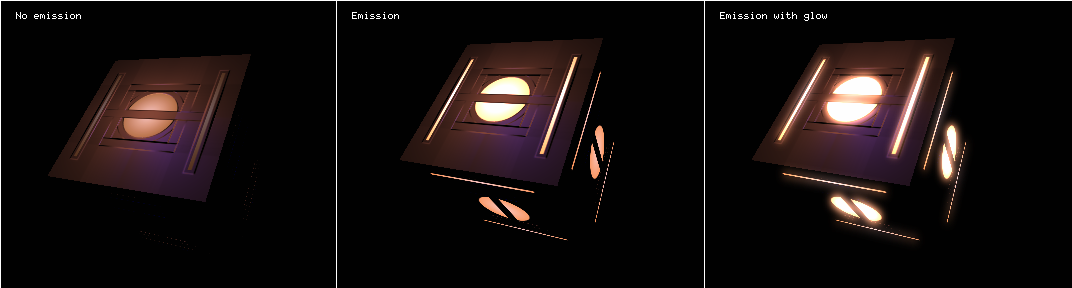

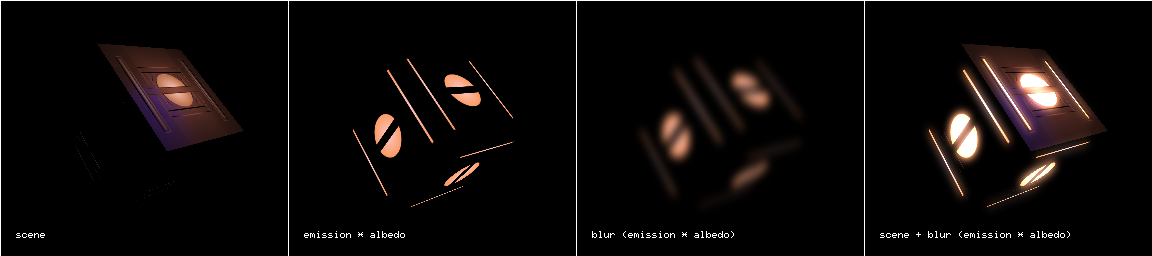

In the diagram, when rendering the front face of the light volume at point P0, the depth of the light volume face at P0 is less than the current scene depth, and so the depth test succeeds and the light clip volume bit is not touched. When rendering the back face of the light volume at point P1, the depth of the light volume face at P1 is greater than the current scene depth so the depth test fails, and the light clip volume bit is inverted, setting it to true. This means that the scene geometry along that view ray is inside the light clip volume.